In today’s fast-paced, digitally driven business world, cloud computing has become the foundation of scalable and flexible IT infrastructure. As organizations move to the cloud to gain agility, scalability, and cost savings, they need to monitor their cloud environments rigorously to ensure performance, security, and reliability. That’s why a good cloud monitoring system, like Icinga, is critical for cloud operations.

Cloud monitoring architecture involves the tools, processes, and technologies used to track the health, performance, and usage of cloud-based resources. With this in place, organizations can identify issues early, optimize resource use, and maintain uptime for critical applications. But what exactly does a cloud monitoring architecture look like, and how does it function in practice? In this post, we will explore the structure, components, and best practices of a solid cloud monitoring architecture.

Why Cloud Monitoring Matters

Before diving into the architecture itself, it’s essential to understand why cloud monitoring is so critical to businesses:

- First and foremost, monitoring helps ensure cloud resources perform optimally, detecting service degradation early, which allows teams to act quickly.

- Furthermore, it aids in cost efficiency. By tracking resource usage, businesses avoid over-provisioning or underutilizing their cloud infrastructure.

- In addition, monitoring alerts teams about potential security breaches, misconfigurations, or unauthorized access, making it crucial for security and compliance.

- Finally, monitoring helps prevent unexpected downtime and keeps critical applications online, supporting availability.

Key Components of Cloud Monitoring Architecture

Data Collection Layer

The data collection layer is the foundation of cloud monitoring architecture. Here, data is gathered from various sources across the cloud environment. This data typically includes:

- Metrics: Quantitative data, such as CPU usage, memory consumption, network latency, and disk I/O. These metrics help monitor system performance and resource allocation.

- Logs: Logs provide detailed information about activities or errors within the cloud environment. They include application logs, system logs, and audit logs, thus offering a full picture of system health.

- Events: Event-based data captures specific actions (e.g., virtual machine restarts, storage provisioning, or security alerts). As a result, events help detect issues in real time.

- Traces: Distributed tracing is critical for monitoring microservices, revealing the performance and health of services across multiple regions and nodes.

Data collection agents, such as Icinga’s monitoring agents, gather this data from cloud resources and pass it on for further analysis.
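
As a rough illustration of what a collection agent runs, the sketch below follows the check plugin convention that Icinga shares with other Nagios-compatible tools: a status line, optional performance data after a `|`, and an exit code of 0 (OK), 1 (WARNING), 2 (CRITICAL), or 3 (UNKNOWN). The function names, the choice of disk usage as the metric, and the 80%/90% thresholds are assumptions made for this example, not part of any shipped plugin.

```python
import shutil

# Exit codes defined by the monitoring plugin convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def evaluate_disk(used_pct, warn=80.0, crit=90.0):
    """Map a disk usage percentage to (exit_code, plugin_output)."""
    if used_pct >= crit:
        code, label = CRITICAL, "CRITICAL"
    elif used_pct >= warn:
        code, label = WARNING, "WARNING"
    else:
        code, label = OK, "OK"
    # Performance data format: label=value[unit];warn;crit;min;max
    perfdata = f"disk_used={used_pct:.1f}%;{warn:.0f};{crit:.0f};0;100"
    return code, f"DISK {label} - {used_pct:.1f}% used | {perfdata}"


def check_disk(path="/", warn=80.0, crit=90.0):
    """Sample disk usage for `path` and evaluate it against the thresholds."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        # The metric could not be collected, so the state is UNKNOWN.
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    used_pct = 100.0 * usage.used / usage.total
    return evaluate_disk(used_pct, warn, crit)


def main():
    """Print the plugin output and return the exit code."""
    code, output = check_disk()
    print(output)
    return code
```

When run as a script, the exit code would be handed back to the agent with `raise SystemExit(main())`.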

Data Aggregation Layer

Once collected, the data moves to the data aggregation layer, where it is consolidated and normalized. This ensures data from various sources can be centrally processed, making it easier to derive meaningful insights.

In a typical cloud environment, there may be numerous microservices, containers, and virtual machines, each generating logs and metrics. Aggregating data in one place is essential. This can be achieved using:

- Time-series databases (e.g., Prometheus, InfluxDB) for storing metrics over time, which aids in analyzing and reporting trends.

- Log aggregators (e.g., ELK Stack – Elasticsearch, Logstash, Kibana) for real-time search and visualization of logs from multiple sources.

- Event stream processors (e.g., Apache Kafka, Amazon Kinesis) to ingest and aggregate data in real time from diverse sources.

Ultimately, this layer ensures that all data is available in a standardized format, prepared for further analysis.

Icinga also integrates well with time-series databases and log management tools to enrich data aggregation.
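
To make "consolidated and normalized" more concrete, here is a minimal sketch of a normalization step. Both input shapes (`metric_agent` and `app_log`) and the target schema are hypothetical and exist only for this example; real sources will differ.

```python
from datetime import datetime, timezone


def normalize(record, source):
    """Convert a source-specific record into one common schema."""
    if source == "metric_agent":
        # Assumed shape: {"host", "name", "value", "ts"}, with ts in epoch seconds.
        ts = datetime.fromtimestamp(record["ts"], tz=timezone.utc)
        return {
            "timestamp": ts.isoformat(),
            "host": record["host"],
            "kind": "metric",
            "name": record["name"],
            "value": float(record["value"]),
        }
    if source == "app_log":
        # Assumed shape: {"hostname", "time", "level", "msg"}, where time is
        # an ISO 8601 string that carries a UTC offset.
        ts = datetime.fromisoformat(record["time"]).astimezone(timezone.utc)
        return {
            "timestamp": ts.isoformat(),
            "host": record["hostname"],
            "kind": "log",
            "name": record["level"].lower(),
            "value": record["msg"],
        }
    raise ValueError(f"unknown source: {source}")
```

Whatever the schema looks like in practice, the point is that timestamps share one time zone and fields share one set of names before the data reaches storage.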

Data Analysis and Correlation Layer

The data analysis and correlation layer applies algorithms and machine learning techniques to identify patterns, anomalies, and correlations in the data. This layer is essential for troubleshooting and identifying root causes more efficiently.

Here’s how this layer works:

- Threshold-based Alerting: Simple rules can trigger alerts when metrics surpass or fall below predefined thresholds (e.g., CPU utilization above 90%). Thus, teams are notified promptly when issues arise.

- Anomaly Detection: Advanced algorithms detect unusual patterns or trends that signal potential problems before they escalate. Consequently, teams can proactively manage infrastructure instead of reacting to crises.

- Root Cause Analysis: Correlating data from various sources helps pinpoint the root cause of issues. For example, if an application experiences delays, analysis might identify network latency or a failing service as the cause.

Some tools also use AI and machine learning to predict future issues based on past data, but Icinga’s powerful rule-based engine remains a highly effective tool for real-time data analysis and correlation.
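
The first two techniques above can be sketched in a few lines. The 90% threshold mirrors the example given earlier; the rolling z-score is just one simple form of anomaly detection, picked here for illustration, and the window size and limit are assumed values.

```python
from statistics import mean, stdev


def threshold_alert(value, upper=90.0):
    """Return True when a metric exceeds its upper threshold."""
    return value > upper


def zscore_anomalies(values, window=5, limit=3.0):
    """Return indexes of samples that deviate strongly from recent history.

    Each sample is compared with the mean and standard deviation of the
    `window` samples immediately before it.
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # With zero variance there is no scale to judge against, so skip.
        if sigma > 0 and abs(values[i] - mu) / sigma > limit:
            anomalies.append(i)
    return anomalies


cpu = [50, 52, 49, 51, 50, 95, 51]
print(threshold_alert(cpu[5]))  # True: 95 is above the 90% threshold
print(zscore_anomalies(cpu))    # [5]: only the spike is flagged
```

A fixed threshold would miss a jump from 10% to 60%, while the z-score check would catch it; in practice the two are usually combined.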

Visualization and Dashboard Layer

The visualization and dashboard layer makes monitoring data easy to interpret, enabling teams to make informed decisions quickly. Well-designed dashboards are crucial for presenting real-time data and trends.

- First of all, customizable dashboards allow users to build dashboards tailored to their needs, displaying relevant metrics like system uptime, error rates, or response times.

- Moreover, dashboards can display real-time alerts, which help teams respond promptly to incidents.

- Additionally, historical data visualization enables teams to analyze past data, spot trends, or identify recurring issues, allowing for long-term planning.

- Finally, tools like heat maps and topology maps show the health and relationships of individual components within the system, helping to spot potential trouble areas.

Popular tools like Grafana, Datadog, and Amazon CloudWatch offer advanced visualization capabilities, allowing users to tailor dashboards according to their specific needs.

With Icinga Web 2, users get a real-time visual representation of the monitoring data, empowering teams to act on critical insights quickly.

Alerting and Incident Management Layer

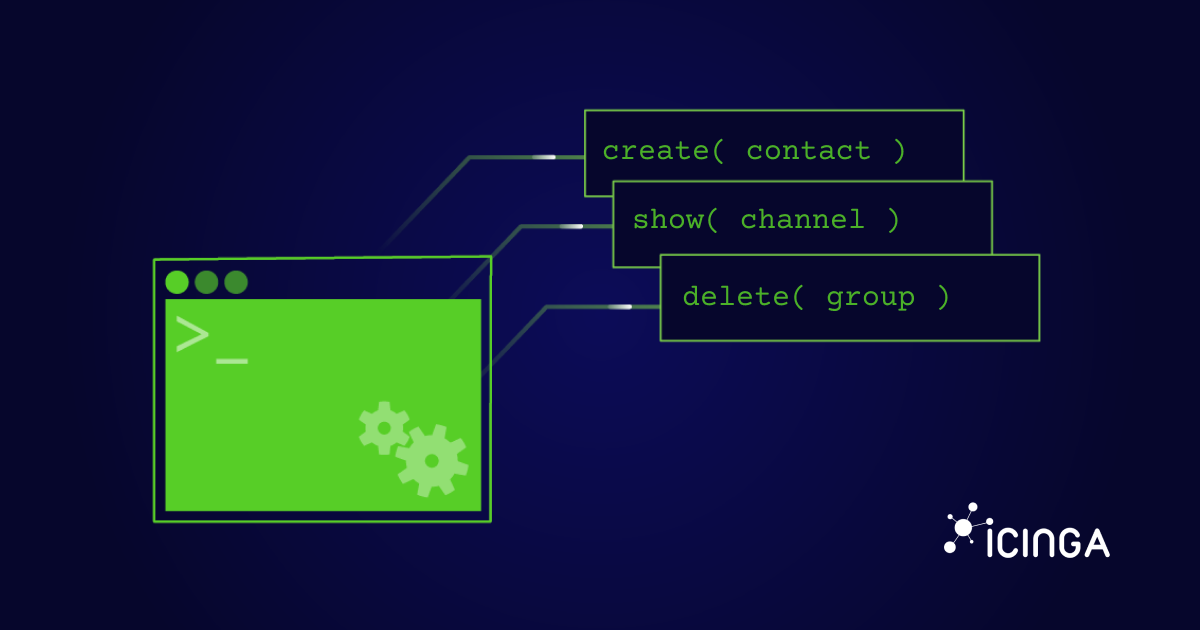

Monitoring data must be actionable to provide value. The alerting and incident management layer ensures that when specific conditions occur (e.g., server CPU exceeds 85% utilization), alerts are triggered and sent to the appropriate personnel.

This layer integrates with Icinga’s notification system, which supports email, SMS, and custom alert methods to inform the appropriate team members. Integration with incident management platforms like PagerDuty, Opsgenie, or ServiceNow allows for advanced alerting workflows.

- Email or SMS: Ideal for low-severity incidents.

- Push Notifications: These provide immediate alerts for critical issues.

- On-call Schedules and Escalation Policies: If an alert goes unacknowledged, it escalates to other team members, ensuring no incident is overlooked.

Therefore, this layer ensures that teams can respond efficiently to any incident, minimizing downtime and impact on business operations.
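
The escalation behavior described above can be modeled as a small policy table. This is a sketch under assumed values: the contact names, channels, and delays are hypothetical, and real platforms configure this through their own settings rather than code like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EscalationStep:
    after_minutes: int  # minutes without acknowledgement before this step applies
    contact: str
    channel: str


# Hypothetical policy: each step adds a contact the longer an alert stays open.
POLICY = [
    EscalationStep(0, "primary-on-call", "push"),
    EscalationStep(15, "secondary-on-call", "push"),
    EscalationStep(30, "team-lead", "sms"),
]


def steps_to_notify(minutes_unacknowledged, acknowledged, policy=POLICY):
    """Return every escalation step that has been reached so far."""
    if acknowledged:
        return []  # an acknowledged alert stops escalating
    return [s for s in policy if s.after_minutes <= minutes_unacknowledged]


for step in steps_to_notify(20, acknowledged=False):
    print(f"notify {step.contact} via {step.channel}")
```

After 20 minutes without acknowledgement, this notifies both the primary and the secondary on-call contacts.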

Security and Compliance Monitoring

Security is vital in any cloud environment. The security monitoring layer focuses on ensuring the infrastructure is secure, compliant with regulations, and protected from threats. Security monitoring provides real-time alerts for:

- Unauthorized Access Attempts

- Misconfigurations (e.g., public S3 buckets in AWS)

- DDoS Attacks or Unusual Traffic Patterns

Icinga can monitor security aspects of the cloud environment. It integrates with security tools like OSSEC for intrusion detection or with compliance monitoring tools to help ensure that the cloud environment adheres to standards and regulations such as GDPR, HIPAA, or PCI DSS.

In the end, security monitoring is crucial to protect sensitive data and maintain regulatory compliance.

Dynamic Scaling and Monitoring in Cloud Environments

Cloud environments bring unique challenges to monitoring, especially due to dynamic scaling and ephemeral resources. Unlike traditional infrastructures, cloud resources scale automatically based on demand, and resources like containers and serverless functions can be short-lived. Monitoring in such environments requires a flexible system that adapts in real time.

Auto-Scaling and Elasticity

Cloud platforms often use auto-scaling groups to adjust resource availability. Monitoring tools like Icinga need to dynamically detect and track new instances or containers as they are created or removed. Integrating Icinga with cloud services ensures that no resource is left unmonitored, even during rapid scaling events.
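
At its core, keeping up with auto-scaling is a reconciliation problem: compare what the cloud provider reports with what the monitoring system knows. The sketch below shows only that comparison. Where the two lists come from (for example, a provider's instance listing and the monitoring system's host inventory) is left out, and the instance names are made up.

```python
def reconcile(discovered, monitored):
    """Compare running instances with monitored hosts.

    Returns (to_add, to_remove): instances that still need monitoring and
    hosts whose instances no longer exist.
    """
    discovered, monitored = set(discovered), set(monitored)
    to_add = sorted(discovered - monitored)
    to_remove = sorted(monitored - discovered)
    return to_add, to_remove


# Hypothetical state after a scale-out that also replaced one instance.
running = ["web-1", "web-3", "web-4"]
known = ["web-1", "web-2"]
to_add, to_remove = reconcile(running, known)
print(to_add)     # ['web-3', 'web-4']
print(to_remove)  # ['web-2']
```

Running a comparison like this on a short interval, or on scaling events, keeps the monitored host set in step with the actual fleet.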

Ephemeral Resources

In cloud-native environments, many resources only exist for a short time. Icinga’s ability to discover and monitor these short-lived resources ensures that even transient containers or VMs are tracked throughout their lifecycle, preventing gaps in visibility.

Multi-Cloud and Hybrid Monitoring

With many organizations adopting multi-cloud strategies, it’s crucial that monitoring tools support multiple cloud platforms. Icinga’s distributed monitoring allows you to monitor services across different providers, ensuring a unified view of both cloud and on-prem infrastructure.

Cloud-Specific Metrics

Monitoring cloud-native metrics like IOPS, API request limits, and network throughput is essential. Icinga can track these metrics, providing actionable insights that help optimize resource usage and prevent performance issues.

Cost Optimization

By monitoring resource consumption, Icinga helps you correlate performance with costs. This ensures you’re not over-provisioning resources, leading to more cost-effective cloud usage.
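
One simple way to connect utilization data to cost is to flag hosts that stay well below their capacity. The following sketch assumes CPU percentage samples per host; the 20% average and 50% peak cut-offs are arbitrary example values, not recommendations.

```python
from statistics import mean


def overprovisioned(cpu_samples_by_host, max_avg=20.0, max_peak=50.0):
    """Return hosts whose average and peak CPU usage are both low."""
    flagged = []
    for host, samples in cpu_samples_by_host.items():
        if not samples:
            continue  # no data, so no conclusion
        if mean(samples) < max_avg and max(samples) < max_peak:
            flagged.append(host)
    return sorted(flagged)


usage = {
    "db-1": [65, 70, 80, 75],   # busy: keep as is
    "web-7": [5, 8, 6, 12],     # idle most of the time
    "batch-2": [2, 3, 95, 4],   # low average, but needs headroom for peaks
}
print(overprovisioned(usage))  # ['web-7']
```

Checking the peak as well as the average avoids downsizing hosts that are idle most of the time but still need their capacity for short bursts.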

In cloud environments, monitoring must be as dynamic as the infrastructure itself, and Icinga provides the flexibility needed to stay ahead of changes.

Conclusion

A cloud monitoring architecture is a crucial component of any organization’s cloud strategy. By implementing a robust monitoring solution like Icinga, businesses can gain valuable real-time insights into the health, performance, and security of their cloud systems. This enables them to maintain operational continuity, optimize resources, and ensure security.