This is a guest blogpost by Adam Sweet from the Icinga Partner Transitiv Technologies.

Since this is a longer post, we added a tl;dr at the end.

For many, host and application monitoring is an afterthought at the end of a project. Some people don’t think about monitoring at all until a few failures go unnoticed and a customer or end-user calls to ask why something isn’t working.

We always recommend that monitoring be considered an integral component of any implementation project. If you don’t have any at all, then some monitoring is better than where you are right now. Just adding monitoring for a few hosts and HTTP services should get you to the point where you know about a failure before your users call, but it won’t tell you what the cause of the failure was, allow you to see problems developing before they cause downtime, or help you understand how your applications are performing. So, how do you work out what to monitor to reach monitoring nirvana?

Monitoring Mindset

Setting up monitoring, especially from scratch for a large estate, can seem a mammoth task at the outset, but it starts to look easier as you begin to break it down.

Start by identifying your most important applications and services. If your key infrastructure were wiped out overnight, what would you need to build first to keep your organisation afloat and then get back on its feet?

At least initially, it might be your company website, phone systems or ticketing system so you can take orders, or provide your users with the service they’re paying for. However, saying the website, email, phones, VMware, AWS and the network isn’t enough. What about them will need to be monitored so you can be sure they’re working? We need to break each service or function down into the components that provide them.

Using your website as an example, although you could just run an HTTP check against the front-end server, and doing so is better than nothing, it may well be more than just a single host. It might be a few web servers with a load-balancer in front and a database back end. Add all of those hosts and the applications they serve to your list of things to implement first.

Monitor those and you won’t be caught out by a call asking whether something is down anymore.

Base Platform Metrics

No matter how many device types and hardware platforms you have in your environment, most will share many of the same metrics of interest: CPU usage, memory usage, disk usage, perhaps disk I/O and NIC or switchport bandwidth usage. How you retrieve them may differ based on the OS or hardware platform.

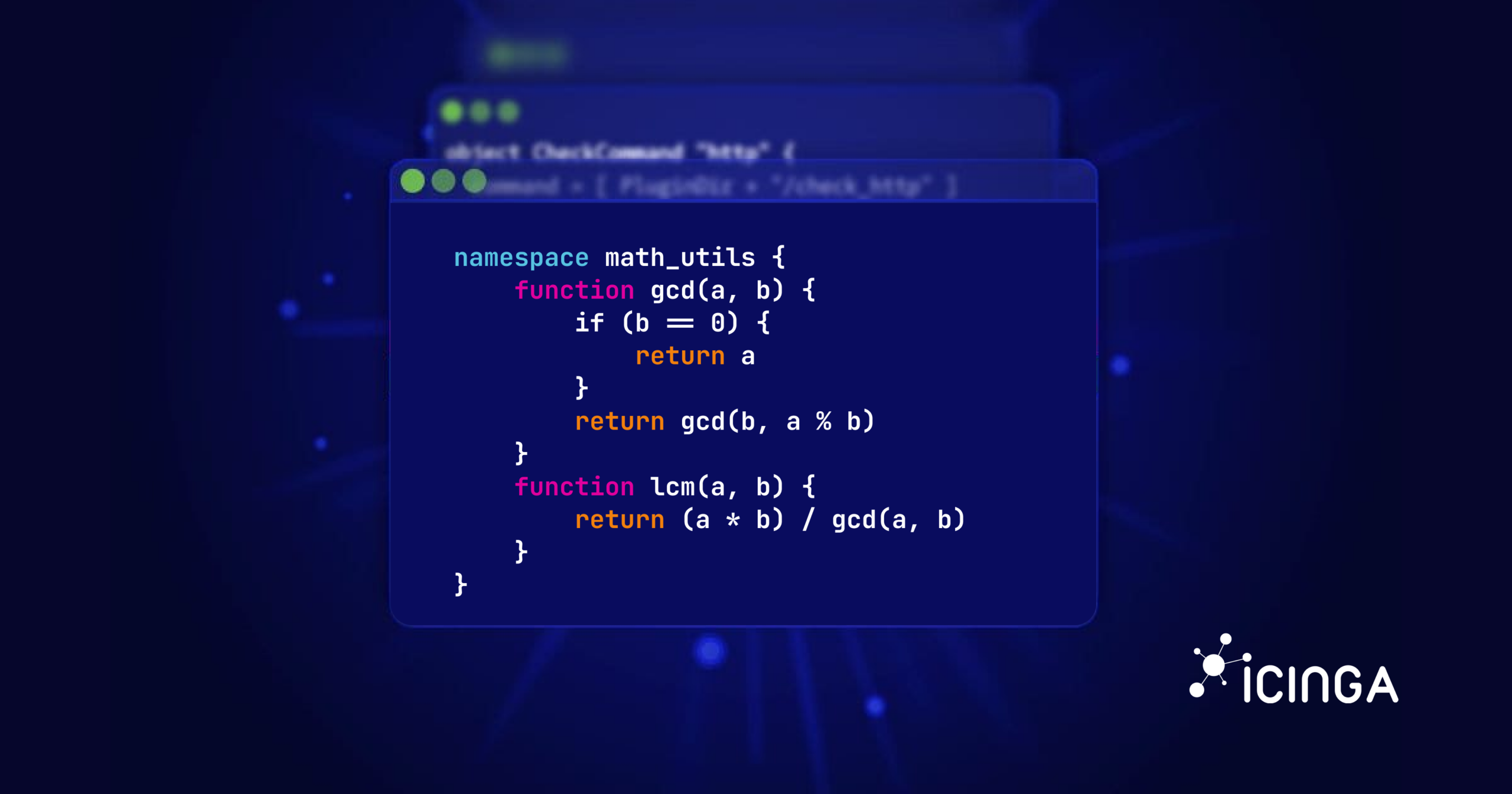

Using custom variables to specify the OS, hardware vendor or hardware model in your Icinga host objects allows you to use Icinga Service Apply rules and create monitoring for all of those physical resource metrics for every host running that OS or platform.

For a Windows-specific CPU usage check, you can create a Service Apply rule that automatically applies the Windows CPU service to any host where the host’s OS variable is set to Windows. The same goes for memory usage, disk usage and so on. That way, every time you add a Windows host, it gets all the standard Windows resource checks automatically.

Likewise for Linux, Cisco and Juniper etc. You can even give the services the same display names regardless of the host’s OS, so you know when you want to look at the memory usage on devices, the service is always called Memory Usage.
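
As a rough illustration of the idea, here is a small Python model of how apply rules turn host variables into services. It is not Icinga's own configuration language, and the host names, the `os` values and the check identifiers are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Host:
    name: str
    vars: dict = field(default_factory=dict)


@dataclass
class ApplyRule:
    service_name: str                 # display name shown for the service
    check: str                        # hypothetical check identifier
    matches: Callable[[Host], bool]   # condition evaluated against each host


# Hypothetical rules: the same display name, but a different check per OS.
RULES = [
    ApplyRule("Memory Usage", "windows-memory", lambda h: h.vars.get("os") == "Windows"),
    ApplyRule("Memory Usage", "linux-memory", lambda h: h.vars.get("os") == "Linux"),
    ApplyRule("CPU Usage", "windows-cpu", lambda h: h.vars.get("os") == "Windows"),
]


def services_for(host: Host, rules=RULES) -> list[tuple[str, str]]:
    """Return (service name, check) pairs for every rule the host matches."""
    return [(r.service_name, r.check) for r in rules if r.matches(host)]


for host in (Host("web01", {"os": "Linux"}), Host("dc01", {"os": "Windows"})):
    print(host.name, services_for(host))
```

Adding a new host with `os` set is enough for it to pick up every matching service, which is the effect the apply rules give you.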

Ideally you’d do this from the outset while implementing your initial monitoring, because it’s much easier to include it at the beginning than to add it later. But if you’re in a hurry to get some monitoring up when you have none, or you’re still grappling with the concepts, then once your urgent hosts and services are in, start adding common OS and platform metrics using host variables and Service Apply rules. You’ll get an enormous amount of monitoring coverage effectively for free every time you add new hosts.

With the high priority application and OS/hardware base level monitoring now covered, we’ve gone from unknown failures like end users reporting the website is down, to being aware that the database process crashed when the OS ran out of memory, so you can get on to preventative maintenance. Consequently you will spend less time fighting fires and your support workload should decrease.

Thinking About Detail

To get to the next level we need to think about what it is about each application, service and hardware component that needs to be monitored. What would you do if you had to check an application issue manually?

The end goal is to monitor your applications and infrastructure inside out. That might include:

- Process checks (are there enough but not too many?)

- Windows service or Linux systemd unit statuses

- Network ports are reachable and listening

- HTTP(S) or other protocol responses

- Output file age and size

- Log file age and size

- Eventlog or log file errors

- Application memory usage

- Application API queries

- Cloud vendor API queries

- Bandwidth usage

- SQL queries

- WMI and WinRM metrics

- Disk, PSU, fan and chassis statuses

- UPS status, load, voltages, battery health and runtime

- SNMP metrics for your network devices and storage appliances

- SNMP traps

- TLS certificate validity and expiry dates

- Container and Kubernetes status

- Number of cloud instances

- Cloud storage sizes

- Cloud hosting spend
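
To make one item from the list concrete, here is a minimal sketch of an output file age check written as a plugin. It assumes the conventional plugin exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN); the function names, thresholds and example path are hypothetical.

```python
import os
import sys
import time

# Conventional exit codes for Nagios-compatible check plugins.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def evaluate_age(age_seconds: float, warn: float, crit: float) -> int:
    """Map a file age onto a plugin state using warning/critical thresholds."""
    if age_seconds >= crit:
        return CRITICAL
    if age_seconds >= warn:
        return WARNING
    return OK


def check_file_age(path: str, warn: float, crit: float) -> tuple[int, str]:
    """Return (state, message) for the file at `path`."""
    try:
        age = time.time() - os.path.getmtime(path)
    except OSError as exc:
        return UNKNOWN, f"UNKNOWN - cannot stat {path}: {exc}"
    state = evaluate_age(age, warn, crit)
    label = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL"}[state]
    # Text after the pipe is performance data: label=value;warn;crit
    return state, f"{label} - {path} is {age:.0f}s old | age={age:.0f}s;{warn};{crit}"


# Hypothetical usage: check_file_age.py /path/to/report.csv 3600 7200
if __name__ == "__main__" and len(sys.argv) == 4:
    state, message = check_file_age(sys.argv[1], float(sys.argv[2]), float(sys.argv[3]))
    print(message)
    sys.exit(state)
```

Keeping the threshold logic in its own function makes it easy to test without touching the filesystem.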

For application monitoring, custom variables and Service Apply rules allow you to create a set of standardised services for each application and apply them to every host which runs that application, just as you did for physical resource metrics. Define each one once and you can inherit them for free, forever.

For each OS, device type and application, is that everything you need to know to be sure it’s OK? Is there anything else you would check manually? If so, add that to your list.

All of your hardware platforms have different properties and hardware which can be monitored but are unique to the platform, such as VMware, Dell OpenManage, HPE Insight Manager or OneView, UPS management software and the SNMP specifics for each different network equipment vendor. These cover things like disk, RAID controller, PSU and fan health and chassis status. Adding host variables for each hardware or vendor platform allows you to use Service Apply rules for monitoring the hardware health of each one and, again, you’ll essentially get that platform monitoring for free each time you add a new host with the necessary host variables set.

If something goes wrong and your monitoring didn’t see it, add monitoring to cover that failure scenario and any downstream consequences. Your monitoring should constantly evolve.

By monitoring all of your application and hardware specifics, you should know when anything at all goes wrong, so you can fix it before it causes bigger problems.

Performance Observability

By now we should know whether our applications are up, running and producing the outputs we expect, but how are they performing?

- How many requests or transactions are they handling per second?

- Are there any unusual spikes or drop-offs?

- How long does it take to respond to certain requests/queries?

- Are there any unusually long running ones?

- Has CPU or RAM usage suddenly gone up while the number of requests or transactions remains the same or has dropped?

- Has the number of cloud compute instances suddenly gone up while the number of requests or transactions remains the same or has dropped?

- How does response latency change as request frequency increases?

These metrics, or telemetry, will either have to be implemented by your software vendor, or your developers will have to instrument your software products to record them. There’s a great talk by Coda Hale on implementing metrics in your software.
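
As a minimal sketch of what such instrumentation can look like, the following Python records how long each request takes and summarises the results. The `LatencyRecorder` class and `handle_request` function are hypothetical stand-ins, not part of any particular metrics library.

```python
import math
import time
from contextlib import contextmanager


class LatencyRecorder:
    """Collects request durations so they can be summarised and exported."""

    def __init__(self) -> None:
        self.durations: list[float] = []

    @contextmanager
    def timed(self):
        """Time the wrapped block and record how long it took, in seconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.durations.append(time.perf_counter() - start)

    def percentile(self, pct: float) -> float:
        """Nearest-rank percentile of the recorded durations."""
        if not self.durations:
            raise ValueError("no durations recorded")
        ordered = sorted(self.durations)
        rank = max(1, math.ceil(pct / 100 * len(ordered)))
        return ordered[rank - 1]

    def summary(self) -> dict:
        """Request count plus median, 95th percentile and worst-case latency."""
        return {
            "count": len(self.durations),
            "p50": self.percentile(50),
            "p95": self.percentile(95),
            "max": max(self.durations),
        }


def handle_request() -> None:
    """Hypothetical stand-in for real application work."""
    time.sleep(0.001)


recorder = LatencyRecorder()
for _ in range(20):
    with recorder.timed():
        handle_request()
print(recorder.summary())
```

Percentiles tend to be more useful than averages here, because a handful of unusually slow requests can hide behind a healthy-looking mean.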

How you retrieve them is down to how they were implemented, but using a telemetry web API like OpenTelemetry is pretty common practice these days and Java applications can use JMX.

Without specific application telemetry, you’ll have to run SQL queries, query the database engine and web server stats, parse log files or use some other means of crunching the raw data that already exists so you can collect, process and measure metrics of interest, but you may not get the same level of application introspection.

Monitoring the internal performance counters for your applications is the level we should aspire to.

Checking Your Services

When using Icinga as an agent for your server OSes and SNMP for network devices and storage appliances, defining your hosts and the necessary services using check commands from the Icinga Template Library will get you most of the way there.

If you need to monitor things that aren’t covered by the ITL, there are a number of places you can look for check plugins to complete your coverage, such as Icinga Exchange.

If you can’t find a monitoring plugin that meets your needs and you don’t have development services in house, as official Icinga Partners our team at Transitiv Technologies can help you.

Examples of custom development we have provided for clients in the past include:

- Implementing custom monitoring plugins and integrating them with Icinga 2.

- Creating tools to migrate configuration from an existing Icinga 1 or Nagios environment into Icinga 2.

- Implementing Ansible roles to automate Icinga 2 agent installation and registration with the Icinga Director self-service API.

- Creating custom dashboards using Grafana and Dashing.

- Creating custom graph templates for Graphite and PNP4Nagios.

So feel free to contact us!

Time Based Monitoring and Alerting

Alongside what you monitor is the question of when you want to monitor it. 9-5, 24/7 or many different time windows for many different things? Icinga is very flexible when it comes to monitoring thousands of things in different time windows and it’s trivial to configure.
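
The idea of a time window can be sketched in a few lines of Python. This is only a model of the concept, not how Icinga stores or evaluates its time periods, and the `BUSINESS_HOURS` and `ALWAYS` definitions are hypothetical.

```python
from datetime import datetime, time

# Hypothetical time windows: weekday number (Monday=0) -> list of (start, end).
BUSINESS_HOURS = {day: [(time(9, 0), time(17, 0))] for day in range(5)}
ALWAYS = {day: [(time.min, time.max)] for day in range(7)}


def in_period(moment: datetime, period: dict) -> bool:
    """Return True if `moment` falls inside one of the period's windows."""
    windows = period.get(moment.weekday(), [])
    return any(start <= moment.time() <= end for start, end in windows)


monday_morning = datetime(2024, 1, 1, 10, 0)   # 1 January 2024 was a Monday
monday_4am = datetime(2024, 1, 1, 4, 0)
print(in_period(monday_morning, BUSINESS_HOURS))  # inside 9-5 on a weekday
print(in_period(monday_4am, BUSINESS_HOURS))      # outside business hours
print(in_period(monday_4am, ALWAYS))              # 24/7 window
```

The same kind of window can then decide both when a check runs and when a notification is allowed to go out.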

Another consideration is who gets notified by monitoring alerts, when and how? Do you want people to get out of bed at 4am? Are you paying them to? Do you have an Ops team watching screens 24/7, or do your engineers respond to notifications overnight? Do you want to notify them by email, SMS, Slack etc? What happens if the on-call engineer doesn’t respond?

Having a clear idea of what needs to be monitored and when, how you want to alert people when things go wrong and when people need to respond allows you to bake that in from the beginning and use it for everything, rather than trying to retrofit it later. It can save you money on out of hours call-outs.

Business Processes

Icinga Business Process Modelling allows you to define business processes, like your website, email, phone system and other IT services, show whether they are working normally, degraded or down and visualise their overall statuses in a dashboard.

Those business processes can be comprised of many Icinga services with logic applied to model their impact on the overall business process status. A dashboard that indicates the level of concern is much better than having the big boss cruise by at 16:59 on a Friday and ask you why one of the database servers has a red alert on it, then insist you come in over the weekend to compress the database storage volumes on all nodes, or rebuild the storage array with bigger disks, when it’s just one of the secondary nodes in the cluster and a database backup has tipped the disk usage 3% over threshold until it’s moved off to archive storage.
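
To show the kind of logic involved, here is a simplified Python model of rolling service states up into a business process status. The three operators are assumptions made for illustration and are not the exact semantics of the Icinga module; the website layout is hypothetical.

```python
OK, WARNING, CRITICAL = 0, 1, 2
NAMES = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL"}


def all_of(states: list[int]) -> int:
    """Every child matters: the worst child state wins."""
    return max(states)


def any_of(states: list[int]) -> int:
    """Redundant children: the best child state wins."""
    return min(states)


def at_least(n: int, states: list[int]) -> int:
    """At least `n` children are needed: the n-th best child state wins."""
    return sorted(states)[n - 1]


# Hypothetical website process: two redundant web servers, a load balancer,
# and a three-node database cluster that needs two healthy nodes.
web = any_of([OK, CRITICAL])
database = at_least(2, [OK, OK, CRITICAL])   # one secondary node over threshold
load_balancer = OK
website = all_of([web, database, load_balancer])
print("Website:", NAMES[website])
```

In this model the single critical database node does not turn the website red, which is exactly the distinction the dashboard should make for the big boss.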

Yawn. TL;DR

If you concentrate on the most important services and the things that go wrong most frequently, you’ll get to the point where you know when something goes down.

Move on to the broad brush strokes and get all the hardware and OS resource metrics in so you can intercept developing issues and perform preventative maintenance before they cause real problems.

What applications do you have? Databases, web servers etc. What metrics are common to each application? Create detailed sets of monitoring services for each application and apply them to every host that runs those applications.

How will you know something like your website, ticketing system or sales platform is working? What do you look at when you need to check they’re OK, or investigate an issue? Monitor for it.

What hardware health information can you get from your hardware vendor’s management platform? Are the fans, PSUs, RAID arrays and UPSes all working, and is their firmware up to date?

What performance metrics can you retrieve from your applications? Do you have to instrument them in your own software or has your vendor done so? Can a vendor tell you what to look at to evaluate performance and what values would they expect to see?

When should each host and service be checked? Who will be notified, when and how?

If you monitor the basics, in the event of a problem you will quickly know what the problem is and what it isn’t.

Build on top of the basics until you have every component of your stack monitored to the point where if anything goes wrong, you know exactly what it is straight away. It will take time.

If you monitor application performance metrics, then you will have a far better idea of what is normal, what’s abnormal and what behaviour to expect in various load and resource conditions. You’ll also see which components or implementation details are holding performance back.

Get this far and you’ll have reached monitoring nirvana.
