Today’s blog post dives into the internals of Icinga 2 and gives you an overview of how the config synchronization works. We will take a small cluster as an example and follow the configuration files through the synchronization mechanism.

We assume some familiarity with distributed Icinga 2 setups, as this post will not go into detail on how to set up an Icinga 2 cluster. If you want to replicate the examples, please check out the installation and distributed monitoring documentation.

Test Setup

To show the config sync by example, we will use a cluster of five nodes: one master node and two satellites in separate zones, each with one agent connected. One of these agents will use the command endpoint feature. Don’t worry if you don’t know this feature yet; we will get to it later.

Syncing Configuration

So let’s get started with a mostly empty zones.d/ directory on the master node:

/etc/icinga2/zones.d/
|-- agent-1/
|-- agent-2/
|-- global-templates/
|-- master/
|   |-- satellite-1.conf
|   `-- satellite-2.conf
|-- satellite-1/
|   `-- agent-1.conf
`-- satellite-2/
    `-- agent-2.conf

As you can see, we have six zones in total: one called global-templates, which will be synced to every node, and one for each of the five nodes described earlier. Each of the four existing configuration files contains an endpoint and a zone object for the node given in the file name. At this point, no host or service objects are defined anywhere.
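To make the contents of these files more concrete, here is a minimal sketch of what satellite-1.conf might look like. The post does not show the actual file, so the host attribute and the exact layout are assumptions, and the file is written to a hypothetical scratch directory rather than to the live zones.d/ directory.

```shell
# Hypothetical scratch directory so this sketch does not overwrite real config.
scratch="${SCRATCH_DIR:-$(mktemp -d)}"

cat > "$scratch/satellite-1.conf" <<'EOF'
// Assumption: the satellite is reachable under its node name.
object Endpoint "satellite-1" {
  host = "satellite-1"
}

// The satellite zone is a direct child of the master zone.
object Zone "satellite-1" {
  endpoints = [ "satellite-1" ]
  parent = "master"
}
EOF
```

The parent attribute is what builds the zone hierarchy that the config sync follows later on.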

Global Zones

Let’s start simple by adding generic-host and generic-service templates to the zone global-templates:

root@master:~# cat > /etc/icinga2/zones.d/global-templates/templates.conf <<EOF
template Host "generic-host" {
  max_check_attempts = 3
  check_interval = 30s
  retry_interval = 10s
  check_command = "hostalive"
}

template Service "generic-service" {
  max_check_attempts = 5
  check_interval = 30s
  retry_interval = 10s
}
EOF

When you tell Icinga 2 to reload its configuration, for example using systemctl reload icinga2, you won’t notice much. However, if the cluster is healthy, quite a few things happened under the hood. The first thing the master node does is to copy the new configuration to the directory /var/lib/icinga2/api/zones/global-templates/:

/var/lib/icinga2/api/zones/global-templates/
`-- _etc/
    `-- templates.conf

As you can see, our newly created templates.conf file ended up there. And since this is a global zone, it is synced to every single node in the cluster (as long as the zone is configured on each node) and this directory should appear on each node.
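The remark that the zone must be configured on each node deserves a short illustration. The following is a sketch of such a declaration, assuming it lives in each node's local zones.conf (the post does not show that file). It is written to a hypothetical scratch directory here so that it does not touch a real setup.

```shell
# Hypothetical scratch directory so this sketch does not overwrite real config.
scratch="${SCRATCH_DIR:-$(mktemp -d)}"

cat > "$scratch/global-zone.conf" <<'EOF'
// A global zone has no endpoints and no parent; every node that
// declares it accepts the synced configuration for this zone.
object Zone "global-templates" {
  global = true
}
EOF
```

A node that lacks this declaration would presumably not receive the global-templates directory, which is what the parenthetical above hints at.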

Adding Hosts and Services

The questions “What to put where?” and “Where will it end up?” get slightly more interesting once we consider hosts and services that should not go into a global zone. Here it makes a difference whether the agent is a command endpoint or schedules checks by itself.

Agent with Local Scheduling

In order to be able to schedule and execute checks locally, the agent must be aware of its host and service definitions. Therefore, these have to go into its own zone. We will set this up for our agent-1 which is a child of satellite-1, so we put a host and service for agent-1 into its zone:

root@master:~# cat > /etc/icinga2/zones.d/agent-1/agent-1.conf <<EOF
object Host "agent-1" {
  import "generic-host"
  address = "agent-1"
}

object Service "icinga" {
  import "generic-service"
  host_name = "agent-1"
  check_command = "icinga"
}
EOF

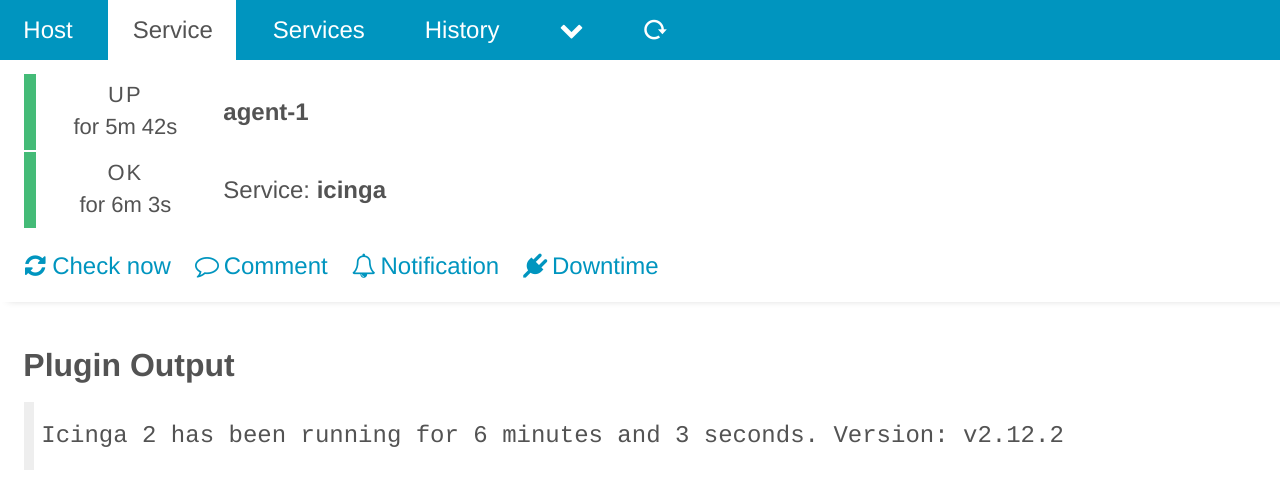

Shortly after telling the master node to reload, you should be able to see the newly created host and service in Icinga Web 2:

What happened in the background? Once again, the master copied the configuration from /etc/icinga2/zones.d/agent-1/ to /var/lib/icinga2/api/zones/agent-1/ and synced it to the correct nodes: all nodes in the target zone and in all of its parent zones, up to and including the master zone. You will therefore find the new configuration in /var/lib/icinga2/api/zones/agent-1/ on master, satellite-1 and agent-1, but not on satellite-2 or agent-2.
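The rule for which nodes receive a zone's configuration can be sketched as a small shell model. This is not how Icinga 2 implements the sync; it only restates the rule from the paragraph above for the example cluster. The function names zone_parent and sync_targets are made up for this illustration, and global zones are left out.

```shell
# Parent of each zone in the example cluster (empty for the top-level zone).
# Hypothetical helper: it hard-codes the hierarchy used in this post.
zone_parent() {
  case "$1" in
    agent-1) echo "satellite-1" ;;
    agent-2) echo "satellite-2" ;;
    satellite-1|satellite-2) echo "master" ;;
    master) echo "" ;;
  esac
}

# Print the zones whose nodes receive the config of zone $1:
# the zone itself, then each parent up to and including master.
sync_targets() {
  zone="$1"
  while [ -n "$zone" ]; do
    echo "$zone"
    zone="$(zone_parent "$zone")"
  done
}

sync_targets agent-1
# prints:
# agent-1
# satellite-1
# master
```

Since every zone in this cluster contains exactly one node of the same name, the printed zones are also the nodes on which the directory appears.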

/var/lib/icinga2/api/zones/agent-1/
`-- _etc/
    `-- agent-1.conf

Agent as Command Endpoint

In contrast, we will set up agent-2 as a child of satellite-2 using the command endpoint feature. At first glance, the following looks very similar, but there are two crucial differences. First, the configuration is now put into the zone of the parent satellite, as the satellite will schedule the checks. Second, the service now contains the additional command_endpoint attribute, which instructs the satellite to delegate the check execution to the actual agent.

root@master:~# cat > /etc/icinga2/zones.d/satellite-2/agent-2-endpoint.conf <<EOF
object Host "agent-2" {
  import "generic-host"
  address = "agent-2"
}

object Service "icinga" {
  import "generic-service"
  host_name = "agent-2"
  check_command = "icinga"
  command_endpoint = "agent-2"
}
EOF

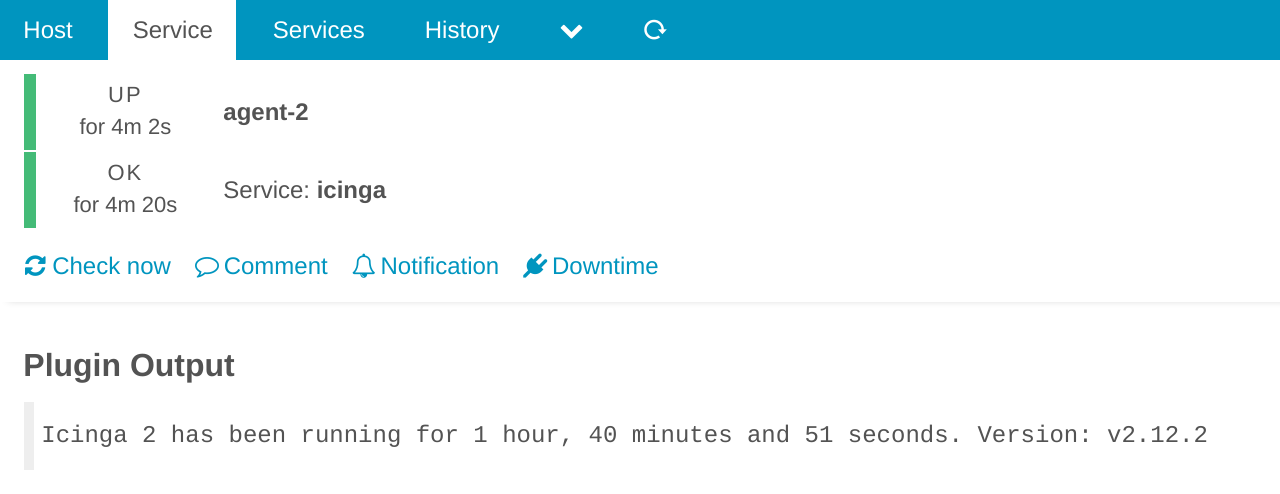

Again, after reloading Icinga 2, there should be another host and service visible in Icinga Web 2:

In fact, apart from the name, this one looks almost indistinguishable from the first one in the web interface, even though very different things happen in the background. Note that for the icinga check command, you can see a difference in the performance data: num_services_ok has the value 1 on agent-1, whereas on agent-2 this is 0. This shows that the latter is not aware of any services and just executes checks as instructed by its parent satellite-2.

Another look at the file system reveals a further difference compared to the previous scenario. As the new configuration was put into the satellite-2 zone, it ends up only on satellite-2 and on the master node:

/var/lib/icinga2/api/zones/satellite-2/ (master, satellite-2)
`-- _etc/
    |-- agent-2-endpoint.conf
    `-- agent-2.conf

Note that no additional configuration got synced to agent-2. Even though it is now executing checks, only the global-templates zone created earlier is available locally:

/var/lib/icinga2/api/zones/ (agent-2)
`-- global-templates/
    `-- _etc/
        `-- templates.conf
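If you want to compare nodes without a tree tool at hand, a small helper along these lines can list the synced configuration files. This is only a sketch: the function name list_synced_config is made up for this example, the default path is the one used throughout this post, and the filter on *.conf is an assumption to hide any bookkeeping files the sync may keep next to the configuration.

```shell
# Print every synced *.conf file below a zones directory, relative to it.
# $1 is optional and defaults to the path used throughout this post.
list_synced_config() {
  zones_dir="${1:-/var/lib/icinga2/api/zones}"
  # Run in a subshell so the caller's working directory is unchanged.
  ( cd "$zones_dir" && find . -type f -name '*.conf' | sort )
}
```

Called without arguments on agent-2, it should list only ./global-templates/_etc/templates.conf, in line with the tree shown above.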

Conclusion

We hope this post shed some light on how the configuration sync of Icinga 2 works. Now you know what to expect in /var/lib/icinga2/api/zones/ throughout your cluster, which should give you a good impression of the current state of the cluster. It’s worth pointing out that the log files usually provide additional hints on how it got into this state. If you want to dive even deeper into this topic, check out the technical concepts documentation.

Until now, high availability was not mentioned in this post. In fact, it works very similarly to what was described before. The only difference is that each zone can now have two nodes, which additionally sync with each other. For the master zone, this sync happens in one direction only: just one node may have configuration in /etc/icinga2/zones.d/. That node is considered the config master and won’t accept incoming config updates.
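For illustration, a zone with two nodes could be declared roughly as follows. The endpoint names master-1 and master-2 are hypothetical, since the cluster in this post has only a single master, and the file is again written to a scratch directory instead of a live configuration.

```shell
# Hypothetical scratch directory so this sketch does not overwrite real config.
scratch="${SCRATCH_DIR:-$(mktemp -d)}"

cat > "$scratch/master-ha.conf" <<'EOF'
// Hypothetical endpoint names for a two-node master zone.
object Endpoint "master-1" { }
object Endpoint "master-2" { }

// Both endpoints belong to the same zone; only one of them
// would hold configuration in /etc/icinga2/zones.d/.
object Zone "master" {
  endpoints = [ "master-1", "master-2" ]
}
EOF
```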

Note that this post only describes the sync mechanism for changes you make yourself in /etc/icinga2/zones.d/ or with the configuration package management API, for example using Icinga Director. The API additionally provides the ability to change individual config objects which works differently, but this is a story for another post.