While doing packaging for Icinga, I noticed we have a lot of YAML files describing GitLab pipelines that do very similar jobs: the same build job, repeated across different operating systems. That duplication is wasteful and means more work whenever these jobs need to change. Tasks like adding new versions, and especially adding new operating systems, become tedious. What I’m looking for is a way to have interchangeable values for our build jobs. If I could provide the operating systems to build on via variables, my life might become a whole lot easier. Maybe I could even build and manage multiple projects from one central hub.

And while this can’t be done in a straightforward way with GitLab CI/CD variables – they can’t be used in job names, for example – I think I might have found something to help me solve this issue.

GitLab Dynamic Child Pipelines

The official GitLab documentation describes a method for defining a job that generates one or more YAML files. Remember: GitLab uses YAML files to configure CI/CD; they define the pipelines in which jobs run. The generated YAML files are stored as artefacts of the generator job and can then be triggered as child pipelines. GitLab itself describes this as “[…]very powerful in generating pipelines targeting content that changed or to build a matrix of targets and architectures.” That is exactly what I’m looking for! Maintaining many software packages in their own little self-contained projects starts off simple, but doing the same work over many, many projects adds up. Using Dynamic Child Pipelines would offer me (and my coworkers) a central place for managing and maintaining packaging in a scalable way.

How do I use Dynamic Child Pipelines?

The documentation mentions templating languages like ytt or Dhall, but the official example it provides uses Jsonnet, which led me to check out Jsonnet and use it for this purpose.

What is Jsonnet?

Jsonnet is a templating language intended for generating configuration files reliably, reducing configuration drift and making a sprawling configuration easier to manage. An extension of JSON, it adds features like variables, functions, loops, conditionals and imports. Its output is JSON, which YAML parsers also accept, so this allows for a fully parameterised YAML configuration, which is exactly what I’m looking for.
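To make those features a bit more concrete, here is a minimal sketch that is not tied to GitLab at all. The names `greeting` and `service`, and the example services, are made up for illustration. Imports are left out so the snippet stays self-contained.

```jsonnet
// Variable: a value defined once and reused.
local greeting = 'hello';

// Function with a default argument; returns an object built from its parameters.
local service(name, port, debug=false) = {
  name: name,
  port: port,
  // Conditional: pick a value based on a parameter.
  log_level: if debug then 'debug' else 'info',
  banner: greeting + ' from ' + name,
};

{
  // Loop (object comprehension): one field per list entry.
  [s.name]: service(s.name, s.port)
  for s in [
    { name: 'web', port: 80 },
    { name: 'api', port: 8080 },
  ]
}
```

Running `jsonnet` on a file like this should print a JSON document with one top-level key per service, each holding the fields built by `service`.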

How is Jsonnet used to create Dynamic Child Pipelines?

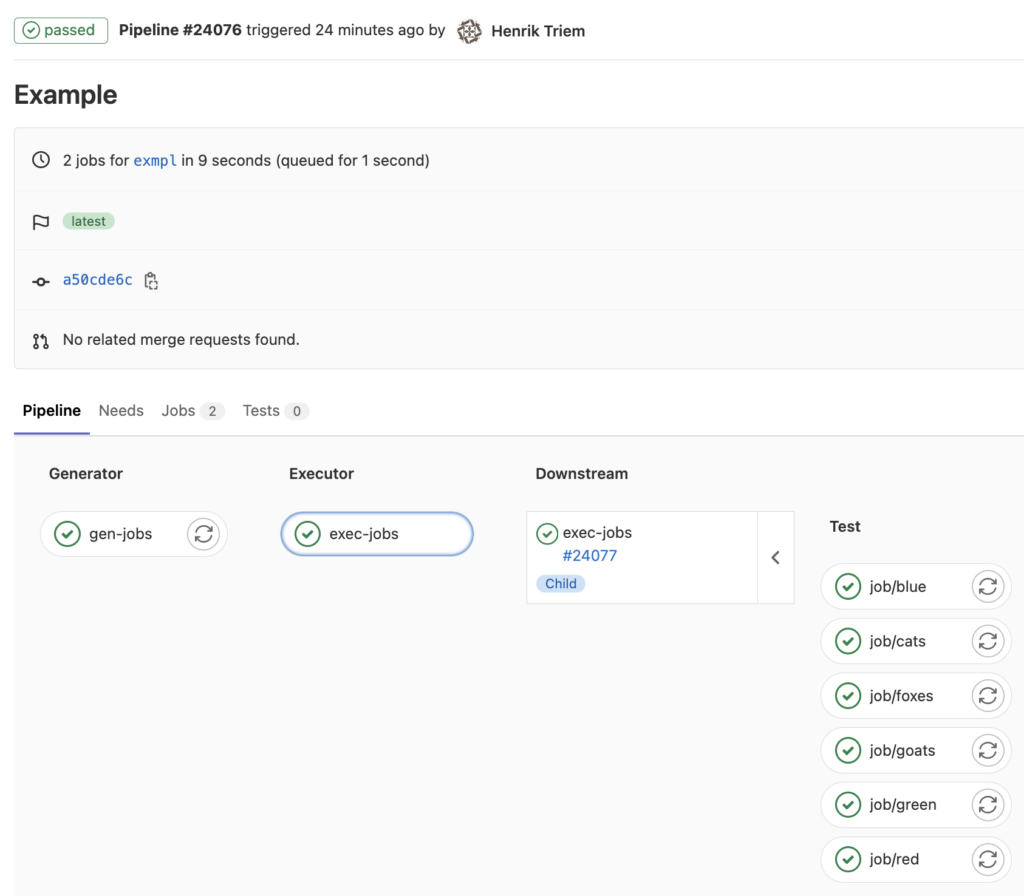

It’s pretty simple, actually. For this example, we have a GitLab project that contains two files:

- .gitlab-ci.yml, a YAML file containing our job generator. It provides Jsonnet and the entry point for our pipeline.
- blueprint.jsonnet, a Jsonnet file containing our abstract job instructions.

In our .gitlab-ci.yml, the first job installs Jsonnet and then runs it to generate a YAML file, which is stored as an artefact. The second job triggers a child pipeline that runs all of the new jobs from the generated YAML file.

Check out this example:

.gitlab-ci.yml:

    stages:
      - generator
      - executor

    gen-jobs:
      stage: generator
      image: alpine:latest
      script:
        - apk add -U jsonnet
        - jsonnet blueprint.jsonnet > jobs.yml
      artifacts:
        paths:
          - jobs.yml

    exec-jobs:
      stage: executor
      needs:
        - gen-jobs
      trigger:
        include:
          - artifact: jobs.yml
            job: gen-jobs
        strategy: depend

blueprint.jsonnet:

    local job(thing) =
      {
        image: "alpine:latest",
        script: "echo I LIKE " + thing
      };

    local things = ['blue', 'red', 'green', 'foxes', 'cats', 'goats'];

    {
      ['job/' + x]: job(x)
      for x in things
    }

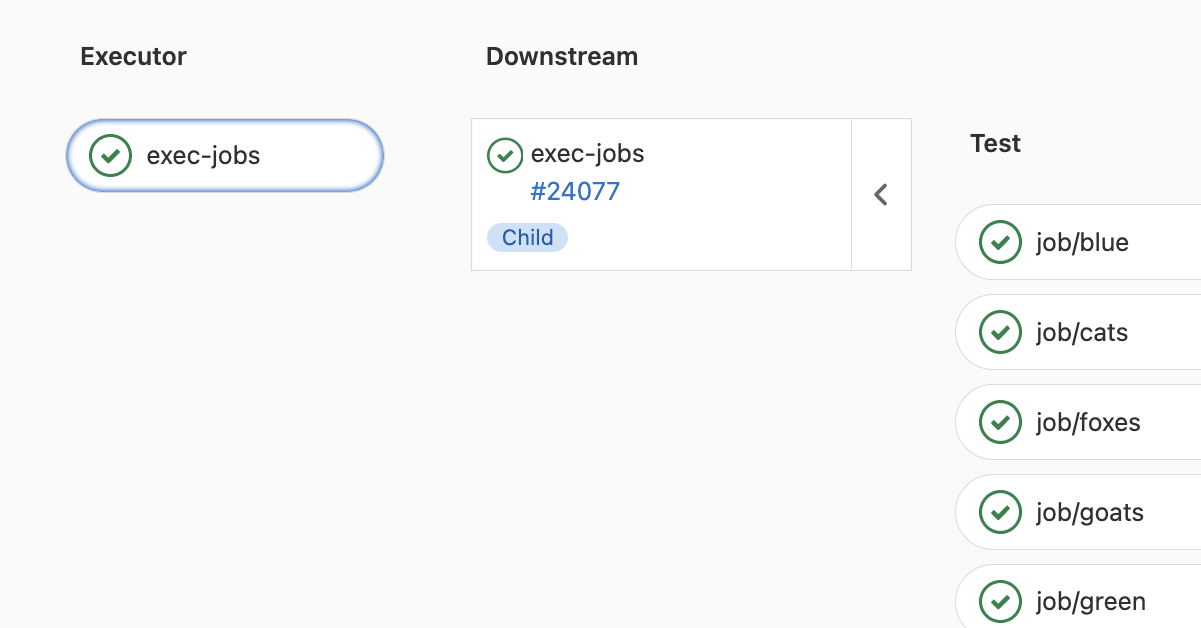

Generate jobs for anything.
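Coming back to the packaging problem from the beginning, the same pattern could be pointed at operating systems instead of colours and animals. The following is only a sketch under assumptions: the target list, the container image names, the `build.sh` script and the `dist/` artefact path are all placeholders I made up, not our actual packaging setup.

```jsonnet
// Hypothetical build targets; images and package types are placeholders.
local targets = [
  { os: 'debian', release: 'bookworm', image: 'debian:bookworm', pkg: 'deb' },
  { os: 'ubuntu', release: 'noble', image: 'ubuntu:noble', pkg: 'deb' },
  { os: 'rockylinux', release: '9', image: 'rockylinux:9', pkg: 'rpm' },
];

// One build job per target.
// build.sh is a hypothetical script assumed to live in the repository.
local buildJob(t) = {
  image: t.image,
  script: [
    './build.sh ' + t.pkg,
  ],
  artifacts: {
    paths: ['dist/'],
  },
};

{
  // Job names are computed here, which plain CI/CD variables cannot do.
  ['build/' + t.os + '-' + t.release]: buildJob(t)
  for t in targets
}
```

With a blueprint like this, adding a new operating system would mean adding one entry to `targets` rather than copying a whole job definition.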