Have you ever run into configuration issues, such as notifications not being sent as expected or apply rules not matching all the objects you expected, possibly because of an incorrectly set custom variable? Icinga 2 offers several options to help you in such situations. Last time, Julian demonstrated how to analyse such problems using the icinga2 object list command. Today I will show you how to investigate your problem interactively using the mighty Icinga 2 debug console.

Prerequisites

The debug console gives you live access to your entire monitoring infrastructure, which also means it could be used to maliciously paralyse everything. Therefore, you need an authorised API user with the console permission. You also need some experience with the Icinga 2 DSL, both to solve your problem without frustration and to avoid unwittingly messing up your Icinga 2 daemon.

Next, start the icinga2 console with the --connect parameter. Instead of embedding the API credentials in the URL, you can pass them via the ICINGA2_API_USERNAME and ICINGA2_API_PASSWORD environment variables. If you don’t intend to change anything live, or want to avoid altering something accidentally, you can also append the --sandboxed parameter. This prevents you from modifying anything in your running Icinga 2 process, whether intentionally or not.

$ ICINGA2_API_USERNAME=root ICINGA2_API_PASSWORD=icinga icinga2 console --connect 'https://localhost:5665/'
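When started with --connect, the console sends your input to the Icinga 2 REST API. The following Python sketch only builds such a request and does not send it. It assumes the /v1/console/execute-script endpoint and its command, session and sandboxed query parameters. build_console_request and credentials_from_env are hypothetical helpers, and they read the same environment variables as the command above.

```python
import base64
import os
import urllib.parse
import urllib.request


def credentials_from_env():
    """Read the API credentials from the same variables the CLI example uses."""
    return os.environ["ICINGA2_API_USERNAME"], os.environ["ICINGA2_API_PASSWORD"]


def build_console_request(base_url, username, password, command,
                          session=None, sandboxed=True):
    """Build (but do not send) a POST request for one console command.

    Assumption: the endpoint is /v1/console/execute-script and it accepts
    'command', 'session' and 'sandboxed' as query parameters.
    """
    params = {"command": command, "sandboxed": "1" if sandboxed else "0"}
    if session is not None:
        # Assumed: reusing a session ID keeps variables alive between commands.
        params["session"] = session
    url = urllib.parse.urljoin(base_url, "/v1/console/execute-script")
    url += "?" + urllib.parse.urlencode(params)
    # HTTP basic authentication with the API user's credentials.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        method="POST",
        headers={"Accept": "application/json", "Authorization": f"Basic {token}"},
    )
```

Sandboxing defaults to on here as a cautious choice. To actually send the request with urllib.request.urlopen, you would also need an SSL context that trusts your Icinga 2 CA.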

Service stuck in a pending state?

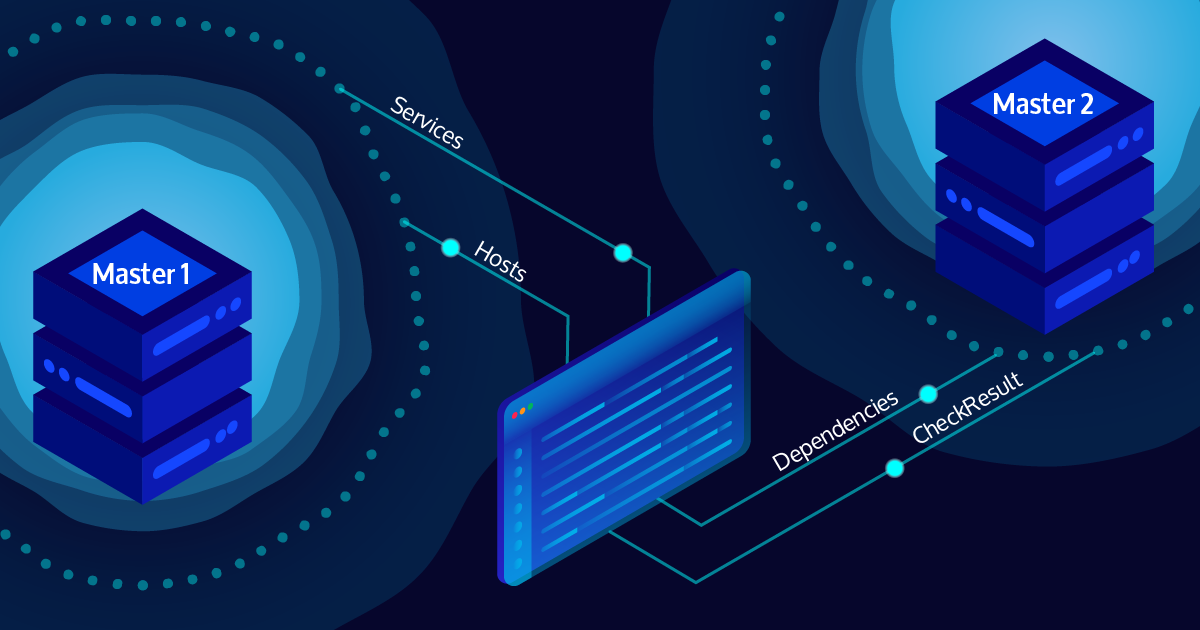

Have you ever had a strange Icinga 2 problem, on a single node or in an HA setup, where one or more services got stuck in a pending state? Several factors can lead to this, such as a synchronisation issue between your two masters or an IDO database that is too slow to keep up with the number of checks. In most cases, however, the debug console lets you identify the problem fairly easily. First, check whether Icinga 2 has ever checked the service at all.

<15> => var s = get_service("example-1", "realtime-load")
null
<16> => s.last_check_result
null
<17> => s.last_check
-1.000000

As you can see above, the ‘realtime-load’ service has never been checked (its last_check is -1) and therefore has no check result to look at. This typically happens when either the checker feature has not been enabled or the service belongs to a different child zone. We can easily verify the latter by querying the service’s zone attribute.

<18> => s.zone
"agent"

In my case, the service is in the “agent” zone. We could now look at the agent’s logs to find out why it is not performing checks, or retrieve the endpoint’s status directly here in the console.

<19> => var endpoint = get_object(Endpoint, "agent")
null
<20> => endpoint.connected
false

Evidently, my endpoint isn’t even connected yet. Admittedly, that is a trivial example: if you’re using the Icinga check, you should already have noticed that the endpoint is gone.
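The triage steps above can be summarised as a small decision function. This is a hypothetical Python sketch: ServiceState and diagnose_pending are illustrative names, the fields mirror the last_check, zone and connected attributes queried in the console, and the local zone name "master" is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ServiceState:
    """Hypothetical snapshot of the attributes inspected in the console."""
    last_check: float                   # -1 means the service was never checked
    zone: str                           # zone the service belongs to
    endpoint_connected: Optional[bool]  # None if the endpoint was not looked up


def diagnose_pending(state: ServiceState, local_zone: str = "master") -> str:
    """Follow the same triage order as the console session above."""
    if state.last_check >= 0:
        return "checked before: not stuck in pending"
    if state.zone == local_zone:
        return "never checked locally: is the checker feature enabled?"
    if state.endpoint_connected is False:
        return f"never checked: endpoint for zone '{state.zone}' is not connected"
    return f"never checked: inspect the logs of zone '{state.zone}'"
```

For the session shown above, diagnose_pending(ServiceState(-1.0, "agent", False)) points at the disconnected endpoint.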

Overdue checks

To determine how many hosts or services in your Icinga 2 setup are currently overdue, you can do the following. It looks a bit complex and technical, but if you are familiar with the Icinga 2 DSL it should be fairly straightforward.

<21> => var hosts = get_objects(Host).filter(h => h.last_check < get_time() - 2 * h.check_interval).map(h => h.name)
null
<22> => hosts.len()
277.000000
<23> => hosts
[ "example-248", "example-373", "example-74", "example-148", ...

The above command searches all hosts known to the master endpoint for those whose last check is older than twice their configured check_interval. Next, we map these hosts to their names and store the result in the hosts variable. Note that the result includes every host that has gone more than twice its check_interval without being checked, including hosts outside the master zone. Hosts that have never been checked (last_check is -1) match as well.
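To make the filter condition concrete, here is the same predicate in Python, applied to plain dictionaries. The dictionary layout (name, last_check and check_interval, with times in seconds) is an assumption for illustration, not the shape of any Icinga 2 API response, and overdue_hosts is a hypothetical helper.

```python
import time
from typing import Iterable, List, Mapping, Optional


def overdue_hosts(hosts: Iterable[Mapping], now: Optional[float] = None,
                  factor: float = 2.0) -> List[str]:
    """Return the names of hosts whose last check is older than factor * check_interval.

    Mirrors the console filter: h.last_check < get_time() - 2 * h.check_interval.
    """
    if now is None:
        now = time.time()
    return [h["name"] for h in hosts
            if h["last_check"] < now - factor * h["check_interval"]]
```

For instance, with now=1000, a host last checked at 0 with a 60-second interval is overdue (0 < 880), while one checked at 990 is not. A never-checked host with last_check -1 is always reported.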

Alternatively, if you only want hosts in a specific zone, use the following expression.

<24> => var hosts = get_objects(Host).filter(h => h.last_check < get_time() - 2 * h.check_interval && h.zone == "satellite").map(h => h.name)
null
<25> => hosts.len()
277.000000

The result is now additionally filtered by the && h.zone == "satellite" condition. Since the count is still 277, all overdue hosts in my example are in the satellite zone.
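Instead of re-running the query once per zone, you could count overdue hosts for every zone in a single pass. Here is a minimal Python sketch of that idea. It again assumes plain dictionaries with name, zone, last_check and check_interval keys. overdue_by_zone and the sample data are hypothetical.

```python
from collections import Counter
from typing import Iterable, Mapping


def overdue_by_zone(hosts: Iterable[Mapping], now: float, factor: float = 2.0) -> Counter:
    """Count overdue hosts per zone, using the same condition as the console filter."""
    return Counter(
        h["zone"] for h in hosts
        if h["last_check"] < now - factor * h["check_interval"]
    )


# Hypothetical sample data, not taken from a real setup.
sample = [
    {"name": "example-1", "zone": "satellite", "last_check": 0.0, "check_interval": 60.0},
    {"name": "example-2", "zone": "master", "last_check": 990.0, "check_interval": 60.0},
    {"name": "example-3", "zone": "satellite", "last_check": -1.0, "check_interval": 300.0},
]
print(overdue_by_zone(sample, now=1000.0))  # Counter({'satellite': 2})
```

If a single zone holds the entire overdue count, as in the example above, that zone's endpoints are the first place to look.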

Conclusion

Although I’ve only shown you trivial examples, my intention with this blog post is to give you an impression of what the debug console is capable of. Getting familiar with it could pay off at some point and save you some headaches. Here you can find everything you can use in the context of the Icinga 2 debug console and API.