I use NixOS, by the way. And today I’m going to show you how to run a simple Icinga setup on that operating system, i.e. a single node with checks and notifications.

In contrast to Icinga Web 2 or Redis, NixOS provides only a package for Icinga 2, but no module. In practice, this means you can’t just write something like services.redis.enable = true; in your NixOS configuration. Instead, you need a complete systemd service of your own, as well as the user and group icinga2, which the daemon uses by default:

{ pkgs, ... }: {
  users.groups.icinga2 = { };

  users.users.icinga2 = {
    isSystemUser = true;
    group = "icinga2";
  };

  systemd.services.icinga2 = {
    requires = [ "network-online.target" ];
    serviceConfig = {
      ExecStart = "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio";
      Type = "notify";
      NotifyAccess = "all";
      KillMode = "mixed";
      StateDirectory = "icinga2";
      StateDirectoryMode = "0750";
      RuntimeDirectory = "icinga2";
      RuntimeDirectoryMode = "0750";
      CacheDirectory = "icinga2";
      CacheDirectoryMode = "0750";
      User = "icinga2";
    };
    wantedBy = [ "multi-user.target" ];
  };
}

This service will fail with 'std::ifstream::open' for file '/etc/icinga2/icinga2.conf' failed with error code 2, 'No such file or directory' unless you have also created that file, e.g. via the environment.etc."icinga2/icinga2.conf" NixOS option. But I’m not going to use the latter! That option merely creates or updates the main Icinga config file on disk whenever NixOS is re-provisioned via nixos-rebuild switch. Icinga 2 therefore sees any changes only after the next manual restart, which is a textbook case of configuration drift. In general, NixOS admins expect a successful nixos-rebuild switch run to apply the whole configuration immediately, including restarting all affected services. But a service only gets restarted when one of its own parts changes, so we have to make icinga2.conf part of the icinga2 service:

@@ -12 +12 @@
-      ExecStart = "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio";
+      ExecStart = "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio -c ${./icinga2.conf}";

The ./ in this construct is relative to the Nix file that declares systemd.services.icinga2, no matter whether that file lives purely locally (in /etc/nixos/) or in a git(1) repository imported by the local config. In other words, an icinga2.conf has to exist next to that nix(1) file. nixos-rebuild switch copies it to /nix/store/, e.g. /nix/store/8mlbb4ccj8gw764ad5vrjcrnk840n32c-icinga2.conf, and writes that full path to /etc/systemd/system/icinga2.service, e.g. ExecStart=+/nix/store/g55l2yhax1gll7c61k64091dc7zgqlhk-icinga2-2.14.5/bin/icinga2 daemon --close-stdio -c /nix/store/8mlbb4ccj8gw764ad5vrjcrnk840n32c-icinga2.conf. A changed icinga2.conf would get a different hash than 8mlbb4ccj8gw764ad5vrjcrnk840n32c, and hence a different path. That changes the ExecStart= line of icinga2.service and forces nixos-rebuild switch to restart Icinga. Speaking of changing icinga2.conf:

object JournaldLogger "mainlog" {
  severity = "information"
}

A logger never hurts! (Only its absence does.) This one makes Icinga’s log messages available via journalctl [-f] [-u icinga2.service]:

Oct 06 14:53:00 aklimov-nixos icinga2[52756]: [2025-10-06 14:53:00 +0000] information/cli: Closing console log.
Oct 06 14:53:00 aklimov-nixos systemd[1]: Started icinga2.service.
Oct 06 14:53:00 aklimov-nixos icinga2[52792]: Activated all objects.

But there’s not much to log yet, so let’s add our first check:

object CheckerComponent "checker" { }

include <itl>

object Host NodeName {
  check_command = "dummy"
}

include <plugins>

object Service "disk" {
  host_name = NodeName
  check_command = "disk"
}

Unfortunately, the standard config shipped in <plugins> tries to access the undefined script variable 'PluginDir', and Icinga fails with exactly that error message. Just like with icinga2.conf, it’s best to pass PluginDir to icinga2 daemon on the ExecStart= line, so that changing it automatically restarts Icinga:

@@ -12 +12,8 @@
-      ExecStart = "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio -c ${./icinga2.conf}";
+      ExecStart = "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio -c ${./icinga2.conf} -DPluginDir=${pkgs.stdenvNoCC.mkDerivation {
+        name = "PluginDir";
+        phases = [ "installPhase" ];
+        installPhase = ''
+mkdir -p $out
+ln -s ${pkgs.monitoring-plugins}/bin/* $out/
+'';
+}}";
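An alternative worth considering is nixpkgs’ pkgs.symlinkJoin. This is a sketch, not the setup used in this post. symlinkJoin merges the listed packages into one tree of symlinks, so the hand-written installPhase is no longer needed. It keeps each package’s directory layout, so the plugins end up under bin/ and PluginDir has to point there. The binding name pluginTree is made up for illustration. The fragment is meant to replace the ExecStart= line from the diff above, not to be imported alongside it.

```nix
{ pkgs, ... }:
let
  # pluginTree is a hypothetical name. symlinkJoin links every file of the
  # listed packages into a single output, keeping their bin/, share/, ... layout.
  pluginTree = pkgs.symlinkJoin {
    name = "PluginDir";
    paths = [ pkgs.monitoring-plugins ];
  };
in
{
  # The store path of pluginTree is still part of ExecStart=, so adding or
  # updating a plugin package still changes the unit and restarts Icinga.
  systemd.services.icinga2.serviceConfig.ExecStart =
    "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio -c ${./icinga2.conf} -DPluginDir=${pluginTree}/bin";
}
```

With this variant, further plugin packages would simply be appended to paths.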

Theoretically, just -DPluginDir=${pkgs.monitoring-plugins}/bin would be sufficient for the disk CheckCommand. But sooner or later you may also use commands that expect their binaries in PluginDir, even though those binaries come from packages other than monitoring-plugins. So it makes sense to create a central directory right away and collect all used plugins there, just like above and just like classic Linux distros do. Speaking of other commands, my setup still lacks a way to inform me about a disk space shortage:

object NotificationComponent "notification" { }

object User "icingaadmin" {
  vars.telegram_chat = 1424462536
}

object NotificationCommand "telegram" {
  command = [ PluginDir + "/env_notify_telegram" ]

  env = {
    ENTG_TOKEN = "$telegram_token$"
    ENTG_CHAT = "$telegram_chat$"
    ENTG_MESSAGE = "$telegram_message$"
  }

  vars.telegram_message = {{{[$notification.type$] $service.name$ on $host.name$ is $service.state$!
$service.output$}}}
}

apply Notification "telegram" to Service {
  command = "telegram"
  users = [ "icingaadmin" ]
  vars.telegram_token = "4839574812:AAFD39kkdpWt3ywyRZergyOLMaJhac60qc"

  assign where true
}

Of course, PluginDir + "/env_notify_telegram" has to exist for the above to work:

@@ -17,2 +17,12 @@ mkdir -p $out
 ln -s ${pkgs.monitoring-plugins}/bin/* $out/
+ln -s ${pkgs.rustPlatform.buildRustPackage {
+  name = "env_notify_telegram";
+  src = pkgs.fetchFromGitHub {
+    owner = "Al2Klimov";
+    repo = "env_notify_telegram";
+    rev = "v0.1.1";
+    hash = "sha256-CUFBPDAJjPwKM3BigxlPIzE86XIVGw+wOVs8i1hQPEo=";
+  };
+  cargoHash = "sha256-YIbIJYSeDGTt16/+3d80L/QQ3lER2igGINcBwACZbUU=";
+}}/bin/* $out/
 '';
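For reference, this is roughly how the Nix file looks once all three diffs are applied. It’s a restructured sketch. The Telegram plugin and the plugin directory are pulled out into let bindings, and envNotifyTelegram and pluginDir are names made up here. The bindings are intended to evaluate to the same derivations as the inline version above. The user and group and all other unit settings are unchanged from the first listing.

```nix
{ pkgs, ... }:
let
  # Hypothetical binding name: the notification plugin from the last diff.
  envNotifyTelegram = pkgs.rustPlatform.buildRustPackage {
    name = "env_notify_telegram";
    src = pkgs.fetchFromGitHub {
      owner = "Al2Klimov";
      repo = "env_notify_telegram";
      rev = "v0.1.1";
      hash = "sha256-CUFBPDAJjPwKM3BigxlPIzE86XIVGw+wOVs8i1hQPEo=";
    };
    cargoHash = "sha256-YIbIJYSeDGTt16/+3d80L/QQ3lER2igGINcBwACZbUU=";
  };

  # Hypothetical binding name: flat directory of symlinks to all plugin binaries.
  pluginDir = pkgs.stdenvNoCC.mkDerivation {
    name = "PluginDir";
    phases = [ "installPhase" ];
    installPhase = ''
      mkdir -p $out
      ln -s ${pkgs.monitoring-plugins}/bin/* $out/
      ln -s ${envNotifyTelegram}/bin/* $out/
    '';
  };
in
{
  users.groups.icinga2 = { };

  users.users.icinga2 = {
    isSystemUser = true;
    group = "icinga2";
  };

  systemd.services.icinga2 = {
    requires = [ "network-online.target" ];
    serviceConfig = {
      # icinga2.conf must sit next to this file. Its store path and that of
      # pluginDir are part of this line, so changing either one changes the
      # unit and makes nixos-rebuild switch restart Icinga.
      ExecStart = "+${pkgs.icinga2}/bin/icinga2 daemon --close-stdio -c ${./icinga2.conf} -DPluginDir=${pluginDir}";
      Type = "notify";
      NotifyAccess = "all";
      KillMode = "mixed";
      StateDirectory = "icinga2";
      StateDirectoryMode = "0750";
      RuntimeDirectory = "icinga2";
      RuntimeDirectoryMode = "0750";
      CacheDirectory = "icinga2";
      CacheDirectoryMode = "0750";
      User = "icinga2";
    };
    wantedBy = [ "multi-user.target" ];
  };
}
```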

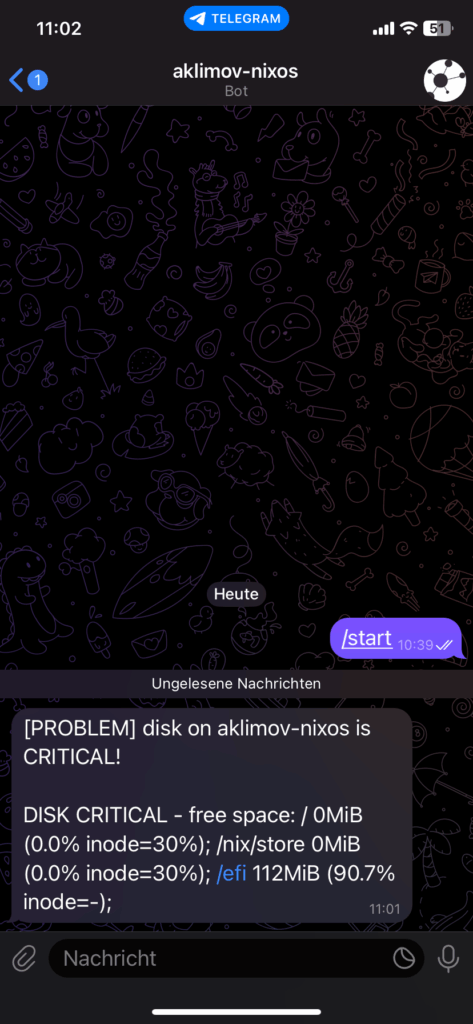

In production, I’d never write the token (4839574812:AAFD39kkdpWt3ywyRZergyOLMaJhac60qc, taken from here) directly into icinga2.conf, as it’s copied to /nix/store/ which is readable by everyone on the system! Instead, I’d deploy it as a secret. But for now I’m glad that I receive notifications directly on my phone:

How exactly Icinga notifications work is a bit out of scope here. For more on that topic please continue reading on our dedicated page.