As a developer, I couldn’t imagine working without any of these three things:

- a search engine, which saves me from thinking for myself

- an IDE, which saves me from typing out function names in full

- and continuous integration, which saves me from running unit tests myself on every pull request I make

For projects on GitHub, the built-in GitHub Actions should handle the latter job fine in most cases. But like everything else, they have limits: the more PRs there are, the more tests each PR runs and the longer those tests take, the longer the PRs have to wait for each other before continuous integration gets to them.
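A back-of-the-envelope sketch in Go shows how quickly this adds up. It assumes (hypothetically) that all PRs are opened at once, are queued first come, first served onto a fixed number of parallel runners, and that every CI run takes the same time. The numbers are made up for illustration, not measured.

```go
package main

import "fmt"

// lastPRWait estimates how many minutes the last of n PRs, all opened at
// once, waits before its CI run starts. Hypothetical model: FIFO queue,
// `runners` identical parallel workers (must be > 0), every run takes
// minutesPerRun minutes.
func lastPRWait(n, runners, minutesPerRun int) int {
	if n <= 0 {
		return 0 // nothing queued
	}
	// PRs run in "waves" of `runners` at a time; the last PR belongs to
	// wave ceil(n/runners) and has to wait for all waves before it.
	waves := (n + runners - 1) / runners
	return (waves - 1) * minutesPerRun
}

func main() {
	// Illustrative numbers only: 10 PRs, 20 minutes per CI run.
	for _, runners := range []int{1, 2, 5, 10} {
		fmt.Printf("%2d runner(s): last PR waits %3d minutes\n",
			runners, lastPRWait(10, runners, 20))
	}
}
```

Under these assumptions, the wait shrinks quickly as runners are added, and how many runners you get is exactly what a self-hosted CI puts in your own hands.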

Recently, while preparing Icinga v2.12.4 and v2.11.9, we ran into this problem ourselves: we opened a bunch of pull requests at once, backporting bugfixes from v2.13.0 to those versions. Each of the PRs triggered a build for every GNU/Linux distro we officially support, and every build took tens of minutes.

My private Go projects don’t take that long to build, but they ran into another problem: the GitHub Actions matrix limit, which allows at most 256 jobs per workflow run. So I could generate all combinations of $GOOS/$GOARCH and $GOARCH-specific variables, but only for a few recent Go versions. And even those almost used up my monthly minutes. But there was a light at the end of the tunnel…
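Just how quickly the 256-job cap bites can be sketched in a few lines of Go. The job counts per Go version below are hypothetical inputs; each matrix job stands for one GOOS/GOARCH/variable combination.

```go
package main

import "fmt"

// matrixLimit is GitHub Actions' cap on the number of jobs a matrix may
// generate per workflow run.
const matrixLimit = 256

// maxGoVersions returns how many Go versions fit into one matrix if every
// version needs jobsPerVersion jobs (must be > 0).
func maxGoVersions(jobsPerVersion int) int {
	return matrixLimit / jobsPerVersion
}

func main() {
	// Hypothetical job counts per Go version.
	for _, jobs := range []int{40, 67, 134} {
		fmt.Printf("%3d jobs per Go version -> at most %d Go version(s)\n",
			jobs, maxGoVersions(jobs))
	}
}
```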

Drone CI

Self-hosted CI systems like Drone have one big advantage: whoever pays the piper calls the tune. No matrix limits, no monthly minute quotas, and if you’re tired of waiting for pipelines to start, feel free to connect Drone to a Kubernetes cluster via the respective runner. But for now, I’d like to keep things as simple as possible.

Prerequisites

- a GitHub account

- a machine with a public IP address (the size doesn’t matter)

- a domain pointing to the machine
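Let’s Encrypt can only validate the domain if it actually resolves to the machine, so a quick pre-flight check can save you a confusing first start. Here is a minimal Go sketch; the program name dnscheck and its two arguments (the domain and the machine’s expected public IP) are made up for this example.

```go
package main

import (
	"fmt"
	"net"
	"os"
)

// hasAddr reports whether want (an IPv4 or IPv6 address) is among addrs.
// Comparing parsed IPs avoids false negatives from different spellings,
// e.g. "2001:db8::1" vs. "2001:db8:0::1".
func hasAddr(addrs []string, want string) bool {
	w := net.ParseIP(want)
	if w == nil {
		return false
	}
	for _, a := range addrs {
		if ip := net.ParseIP(a); ip != nil && ip.Equal(w) {
			return true
		}
	}
	return false
}

func main() {
	if len(os.Args) != 3 {
		fmt.Println("usage: dnscheck <domain> <public-ip>")
		return
	}
	domain, ip := os.Args[1], os.Args[2]
	addrs, err := net.LookupHost(domain)
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	fmt.Printf("%s resolves to %v; points to %s: %t\n", domain, addrs, ip, hasAddr(addrs, ip))
}
```

For example, go run dnscheck.go drone.example.com 203.0.113.7 (both values are placeholders).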

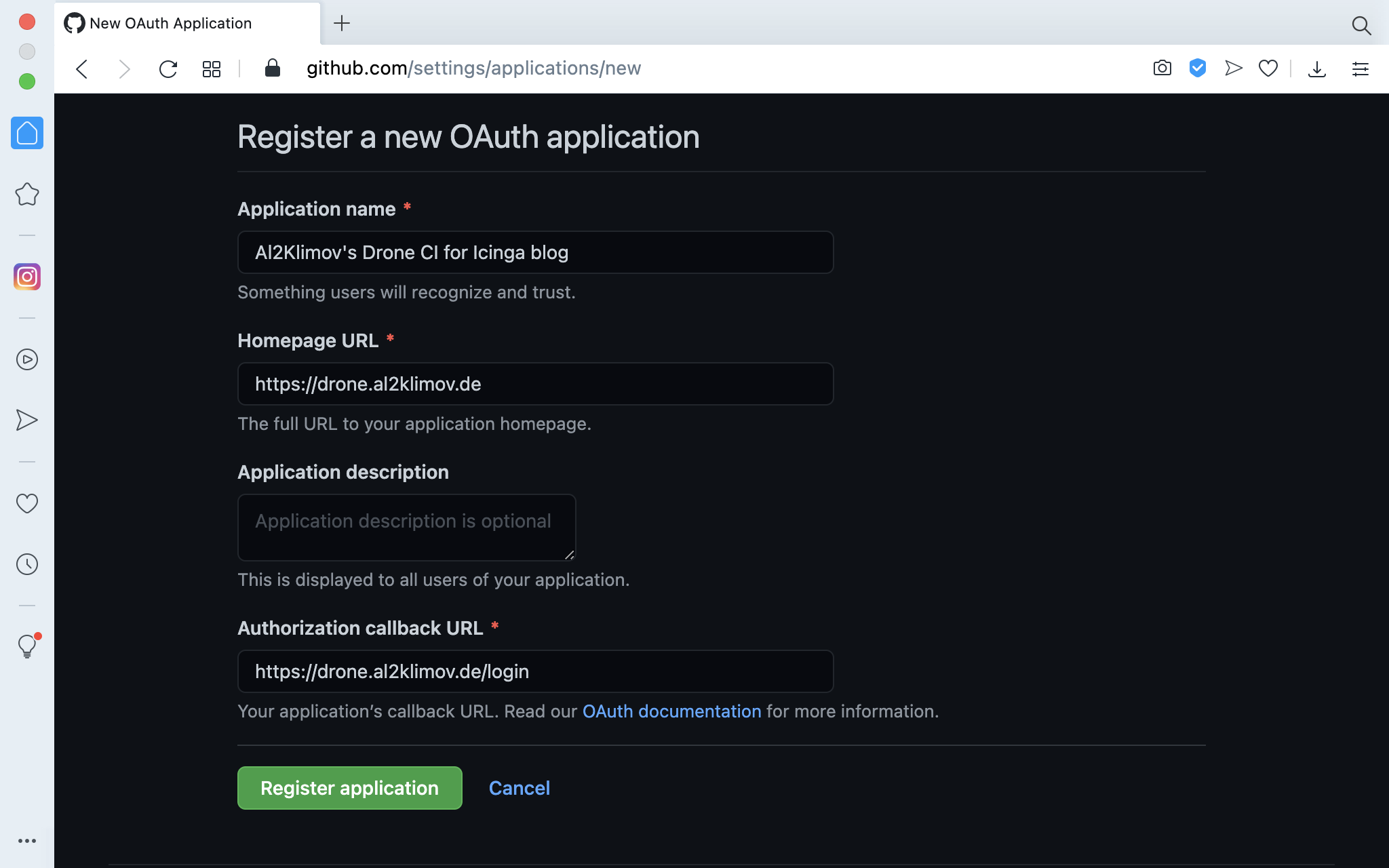

GitHub OAuth application

Create a new OAuth App here. Fill in your app’s data (Drone expects https://&lt;your domain&gt;/login as the authorization callback URL)…

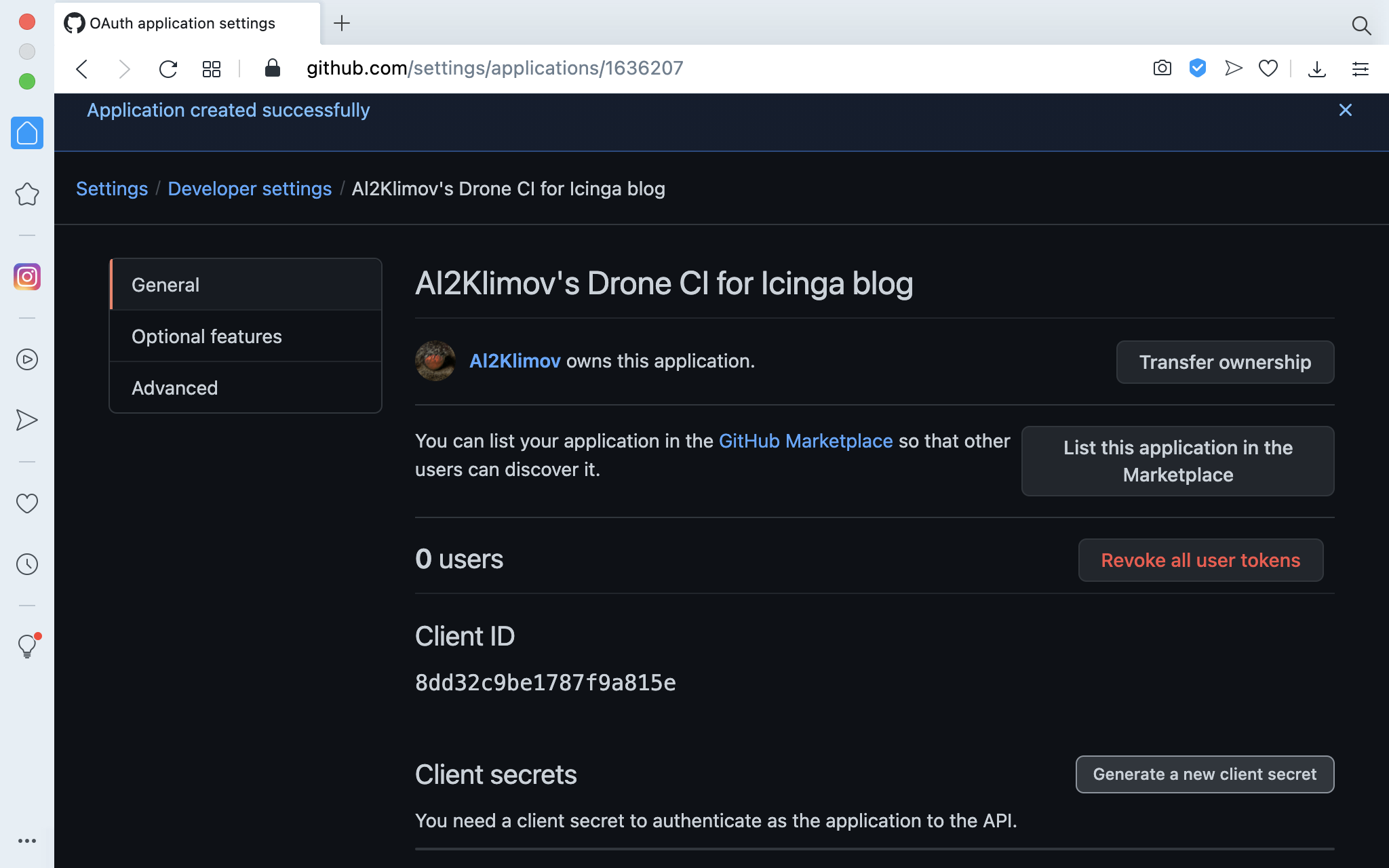

… and click “Register application”. Finally, click “Generate a new client secret”…

… and copy it along with the “Client ID”.

Drone itself

On the machine, install the docker-ce and docker-compose packages as described here, then create a docker-compose.yml file like this:

```yaml
version: '3'

volumes:
  drone: {}

services:
  drone:
    image: drone/drone:1
    ports:
      - 80:80
      - 443:443
    environment:
      DRONE_SERVER_PROTO: https
      # Copy from your OAuth App
      DRONE_GITHUB_CLIENT_ID: 8dd32c9be1787f9a815e
      DRONE_GITHUB_CLIENT_SECRET: 54099182d0e1c099cf8764e368a409b44c3fa7c2
      # Generate with: openssl rand -hex 16
      DRONE_RPC_SECRET: 90fad69e94e7ec256b9d916c3f161617
      # Substitute as needed
      DRONE_SERVER_HOST: drone.al2klimov.de
      DRONE_USER_CREATE: username:Al2Klimov,admin:true
      # Enables Let's Encrypt
      DRONE_TLS_AUTOCERT: 'true'
      # Optional, read on
      DRONE_JSONNET_ENABLED: 'true'
    volumes:
      - drone:/data

  runner:
    image: drone/drone-runner-docker:1
    environment:
      # Same as above
      DRONE_RPC_PROTO: https
      DRONE_RPC_HOST: drone.al2klimov.de
      DRONE_RPC_SECRET: 90fad69e94e7ec256b9d916c3f161617
      # One job at a time, so as not to overload the machine
      DRONE_RUNNER_CAPACITY: '1'
      DRONE_RUNNER_MAX_PROCS: '1'
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
```

Finally, start it by running docker-compose up -d in the same directory as docker-compose.yml.

It’s alive!™

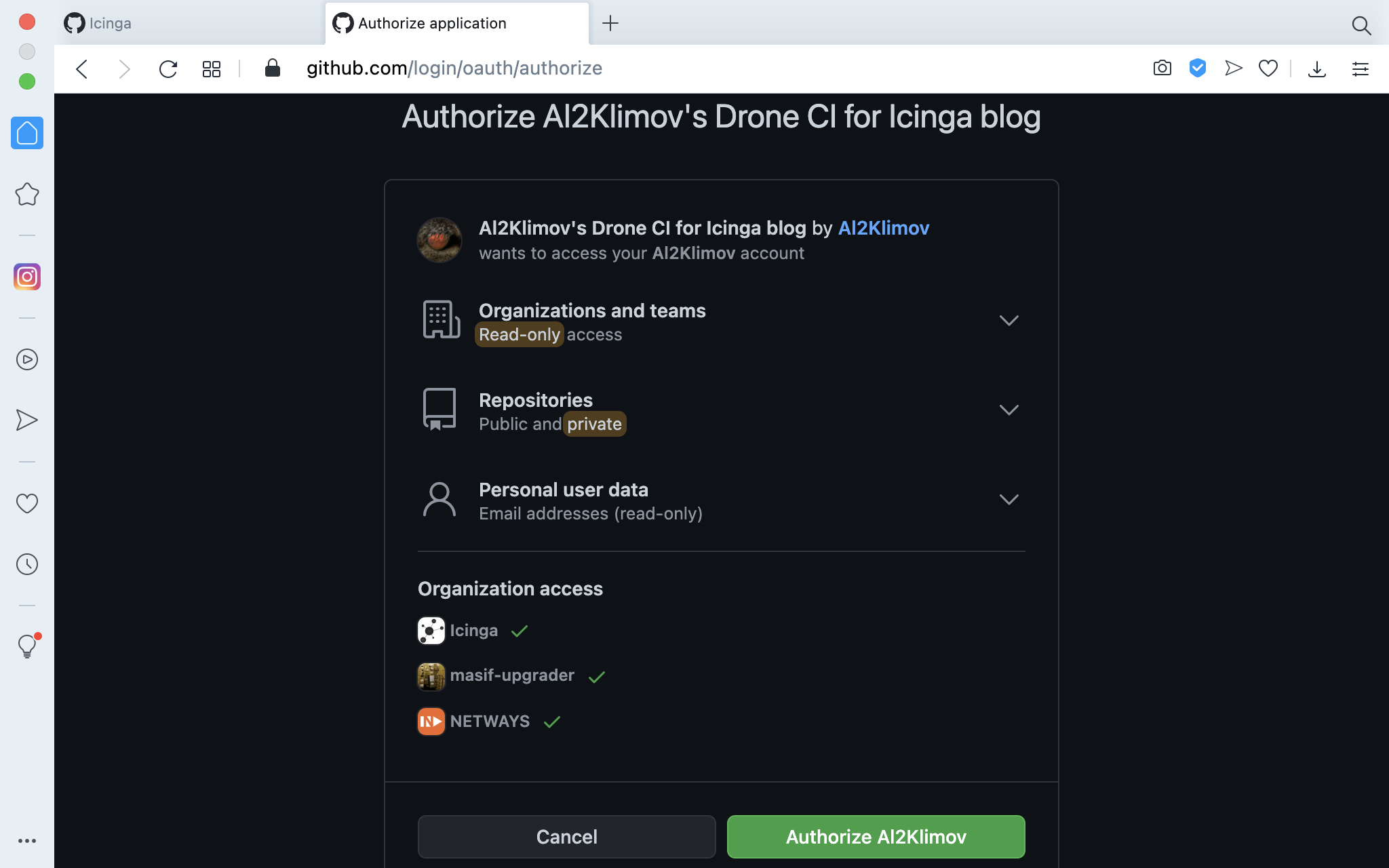

The first time you visit the domain in your web browser, you have to authorize the OAuth application:

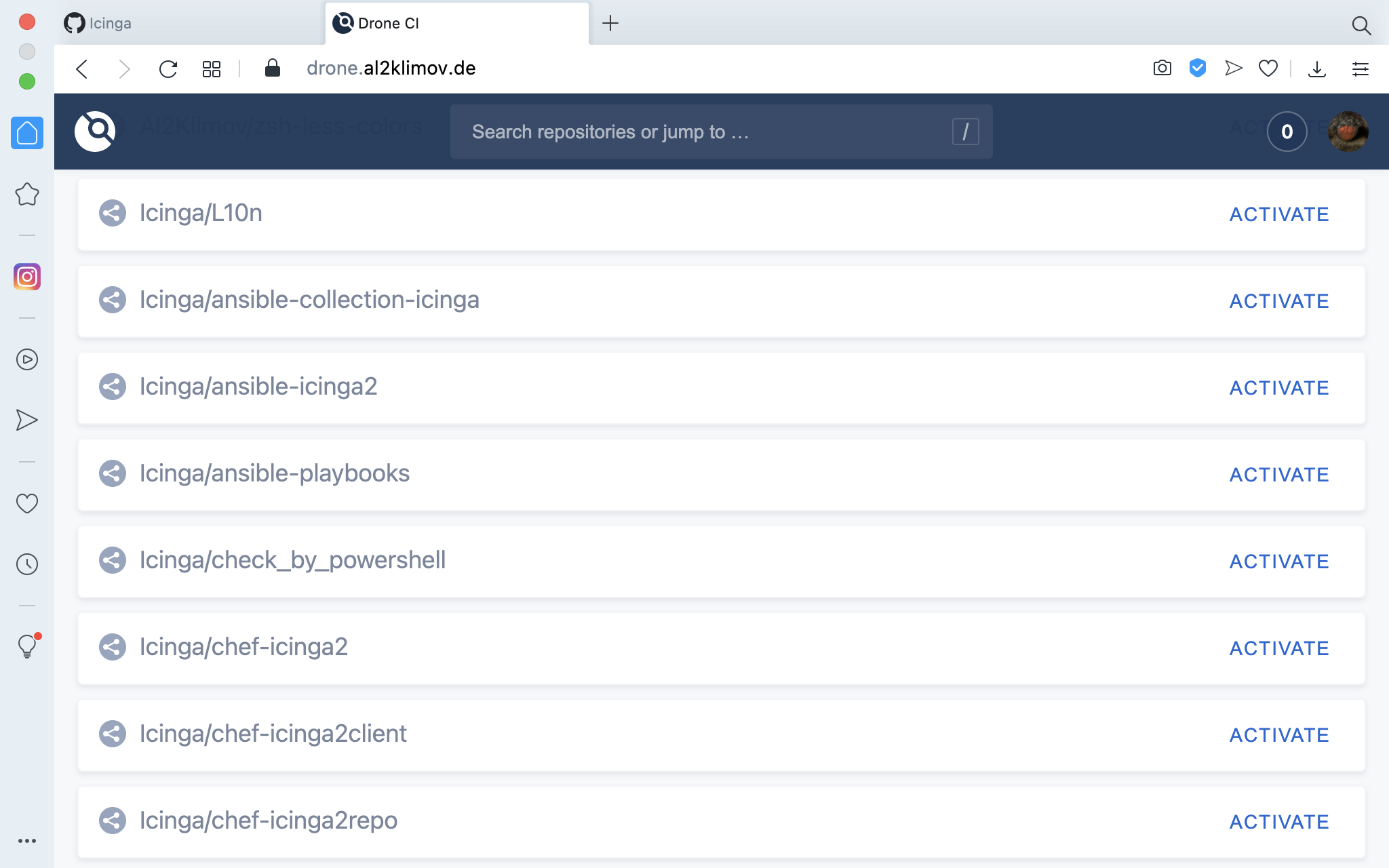

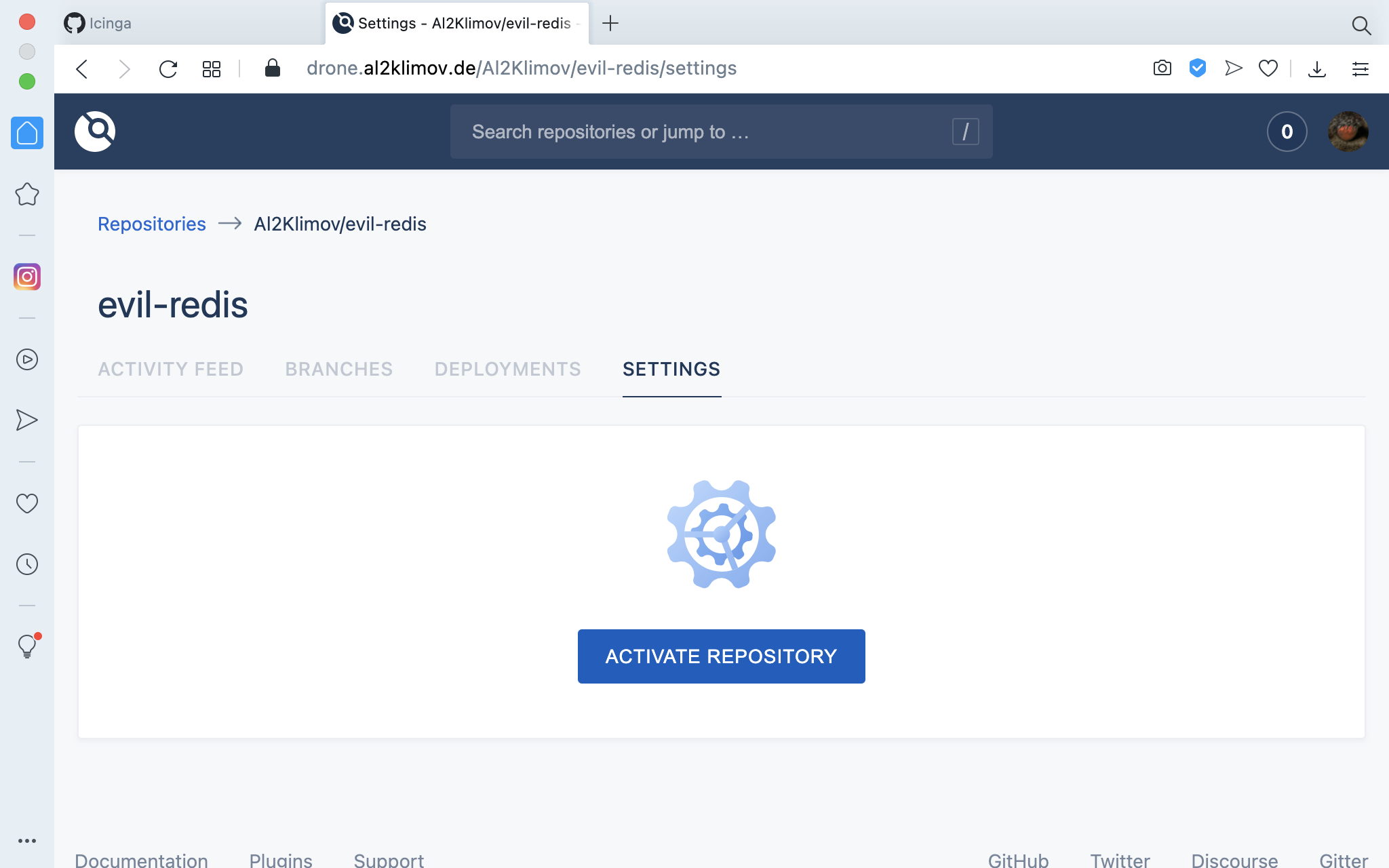

Then you can choose any of your projects…

… and activate it:

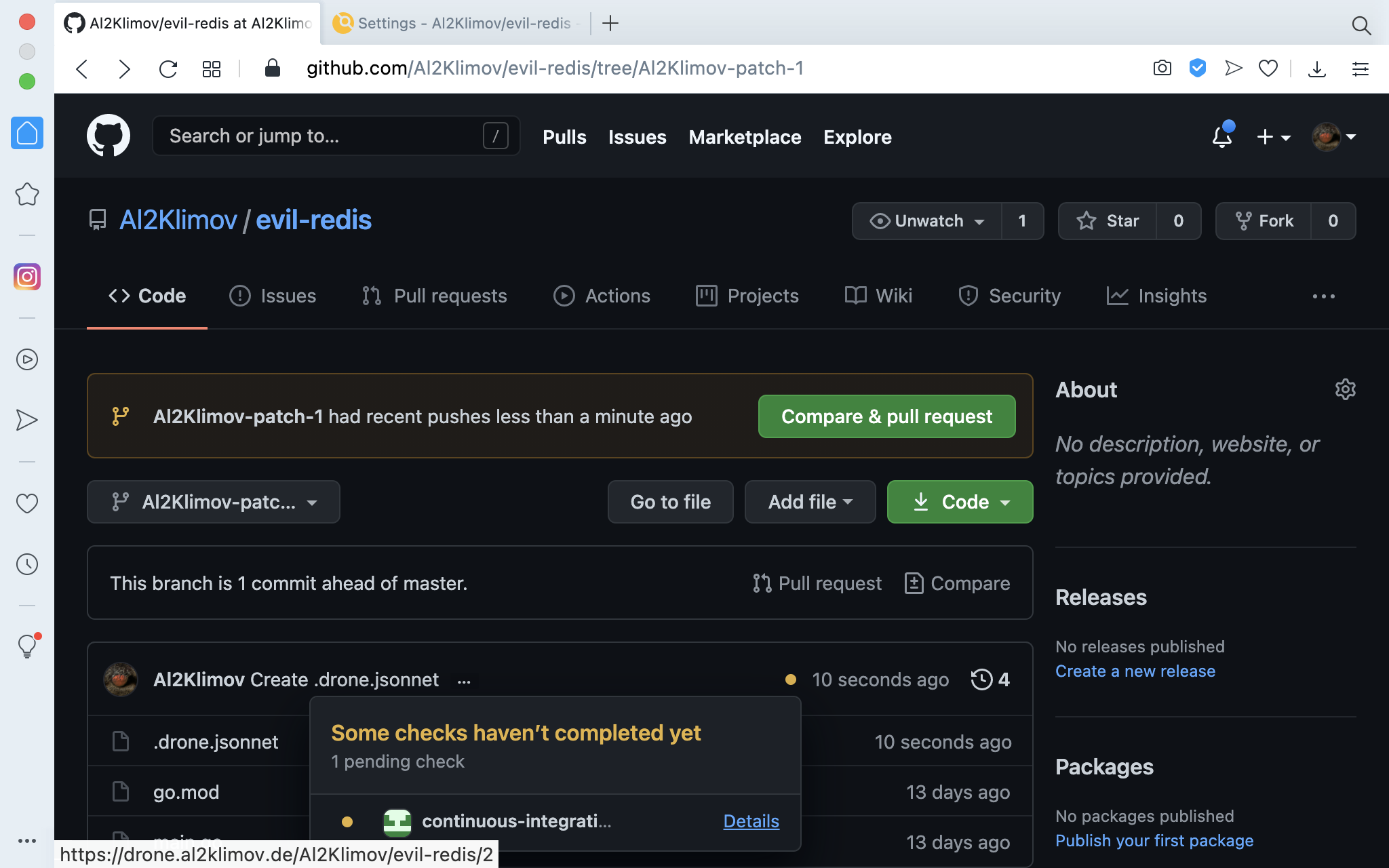

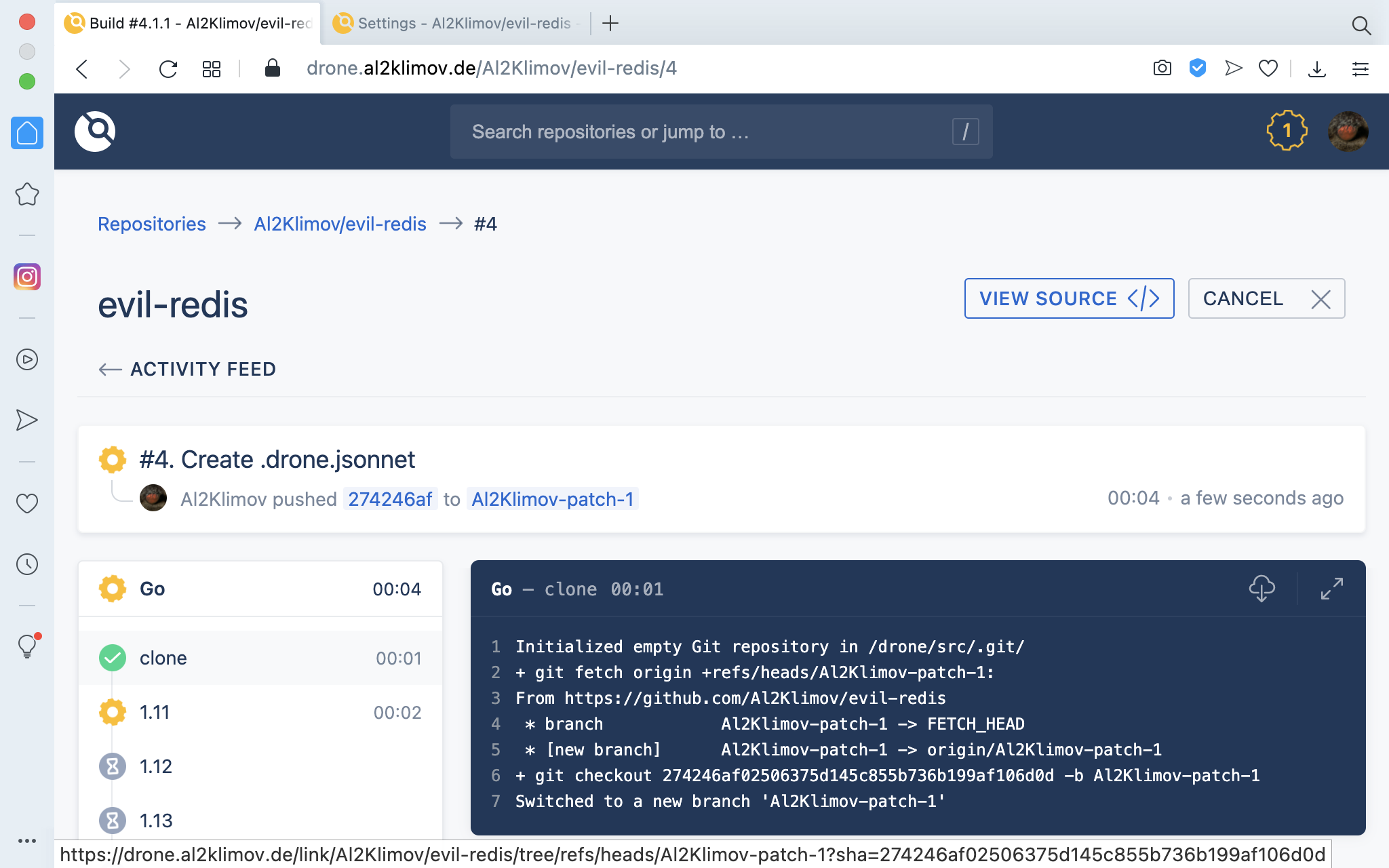

Once that’s done, all branches and PRs are tested as specified in the repository’s pipeline configuration:

Jsonnet

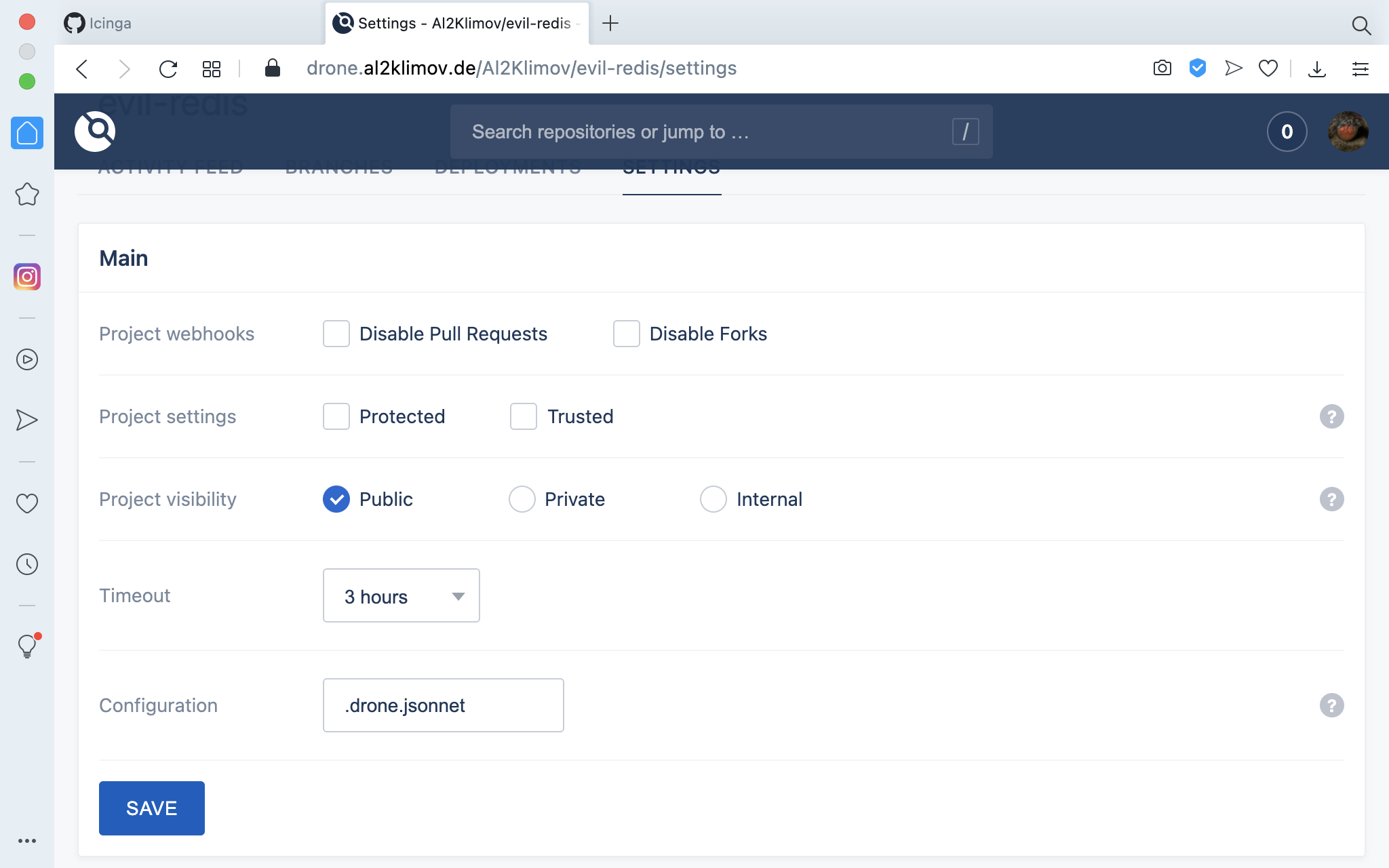

Enabling Jsonnet in the Docker Compose file above and in the project settings (by switching the configuration file to .drone.jsonnet)…

… lets you reuse declarations even more flexibly than YAML anchors do:

```jsonnet
local newest = 116;

local ppc64 = function(go) [ [[ "GOPPC64", "power8" ]], [[ "GOPPC64", "power9" ]] ];
local mips = function(go) [ [[ "GOMIPS", "hardfloat" ]], [[ "GOMIPS", "softfloat" ]] ];
local mips64 = function(go) [ [[ "GOMIPS64", "hardfloat" ]], [[ "GOMIPS64", "softfloat" ]] ];

local gover(i) = std.floor(i / 100) + "." + std.mod(i, 100);
local env2cmd(e) = std.join("", [kv[0] + "=" + kv[1] + " " for kv in e]);

local machines(go) = (if go >= 112 then [
  { GOOS: "aix", GOARCHs: [ "ppc64" ] }
] else [ ]) + [
  { GOOS: "android", GOARCHs: [ "386", "amd64", "arm", "arm64" ] },
  { GOOS: "darwin", GOARCHs: [ "amd64", "arm64" ] },
  { GOOS: "dragonfly", GOARCHs: [ "amd64" ] },
  { GOOS: "freebsd", GOARCHs: [ "386", "amd64", "arm" ] }
] + (if go >= 113 then [
  { GOOS: "illumos", GOARCHs: [ "amd64" ] }
] else [ ]) + (if go >= 116 then [
  { GOOS: "ios", GOARCHs: [ "arm64" ] },
] else [ ]) + [
  { GOOS: "js", GOARCHs: [ "wasm" ] },
  {
    GOOS: "linux",
    GOARCHs: [
      "386", "amd64", "arm", "arm64", "ppc64", "ppc64le",
      "mips", "mipsle", "mips64", "mips64le"
    ] + (if go >= 114 then [ "riscv64" ] else [ ]) + [
      "s390x"
    ]
  },
  { GOOS: "netbsd", GOARCHs: [ "386", "amd64", "arm" ] },
  {
    GOOS: "openbsd",
    GOARCHs: [ "386", "amd64", "arm" ] + (if go >= 113 then [ "arm64" ] else [ ])
  },
  { GOOS: "plan9", GOARCHs: [ "386", "amd64", "arm" ] },
  { GOOS: "solaris", GOARCHs: [ "amd64" ] },
  { GOOS: "windows", GOARCHs: [ "386", "amd64" ] }
];

local envs = {
  "386": function(go) [
    [[ "GO386", if go >= 116 then "softfloat" else "387" ]],
    [[ "GO386", "sse2" ]]
  ],
  arm: function(go) [ [[ "GOARM", "5" ]], [[ "GOARM", "6" ]], [[ "GOARM", "7" ]] ],
  mips: mips,
  mipsle: mips,
  mips64: mips64,
  mips64le: mips64,
  ppc64: ppc64,
  ppc64le: ppc64,
  wasm: function(go) [ [ ], [[ "GOWASM", "satconv" ]], [[ "GOWASM", "signext" ]] ]
};

[
  {
    kind: "pipeline",
    type: "docker",
    name: "Go",
    steps: [
      {
        name: gover(go),
        image: "golang:" + gover(go),
        commands: [
          env2cmd([
            [ "GOOS", os.GOOS ],
            [ "GOARCH", GOARCH ]
          ] + env + [
            [ "CGO_ENABLED", CGO_ENABLED ]
          ]) + "go build ./..."
          for os in machines(go)
          for GOARCH in os.GOARCHs
          for env in (if std.objectHas(envs, GOARCH) then envs[GOARCH](go) else [ [ ] ])
          for CGO_ENABLED in [ 0, 1 ]
        ] + [
          "go test -race -v ./..."
        ] + (if go == newest then [
          "bash -exo pipefail -c 'FILES=\"$(gofmt -d -e .)\"; cat <<<\"$FILES\"; test -z \"$FILES\"'"
        ] else [ ])
      }
      for go in std.range(111, newest)
    ]
  }
]
```
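To see what this Jsonnet actually expands to, here is a rough Go port of its matrix logic (gover, env2cmd, machines, envs and the nested for clauses). It is a hypothetical helper for counting and inspecting the generated go build commands, not something Drone needs; it only mirrors the tables above and doesn’t build anything.

```go
package main

import "fmt"

// kv is one VAR=value pair; machine is one GOOS with the GOARCHs built for it.
type kv [2]string
type machine struct {
	goos  string
	archs []string
}

// since returns xs only if Go version v (e.g. 116) is at least from.
func since[T any](v, from int, xs ...T) []T {
	if v < from {
		return nil
	}
	return xs
}

// gover mirrors gover(): 116 -> "1.16".
func gover(i int) string { return fmt.Sprintf("%d.%d", i/100, i%100) }

// env2cmd mirrors env2cmd(): each pair becomes "K=V ", in order.
func env2cmd(e []kv) (s string) {
	for _, p := range e {
		s += p[0] + "=" + p[1] + " "
	}
	return s
}

// machines mirrors machines(go), including the version-dependent entries.
func machines(v int) []machine {
	m := since(v, 112, machine{"aix", []string{"ppc64"}})
	m = append(m,
		machine{"android", []string{"386", "amd64", "arm", "arm64"}},
		machine{"darwin", []string{"amd64", "arm64"}},
		machine{"dragonfly", []string{"amd64"}},
		machine{"freebsd", []string{"386", "amd64", "arm"}})
	m = append(m, since(v, 113, machine{"illumos", []string{"amd64"}})...)
	m = append(m, since(v, 116, machine{"ios", []string{"arm64"}})...)
	linux := append([]string{"386", "amd64", "arm", "arm64", "ppc64", "ppc64le",
		"mips", "mipsle", "mips64", "mips64le"}, since(v, 114, "riscv64")...)
	return append(m,
		machine{"js", []string{"wasm"}},
		machine{"linux", append(linux, "s390x")},
		machine{"netbsd", []string{"386", "amd64", "arm"}},
		machine{"openbsd", append([]string{"386", "amd64", "arm"}, since(v, 113, "arm64")...)},
		machine{"plan9", []string{"386", "amd64", "arm"}},
		machine{"solaris", []string{"amd64"}},
		machine{"windows", []string{"386", "amd64"}})
}

// variants mirrors envs[GOARCH](go); an empty []kv adds no variables.
func variants(arch string, v int) [][]kv {
	two := func(k, a, b string) [][]kv { return [][]kv{{{k, a}}, {{k, b}}} }
	switch arch {
	case "386":
		if v < 116 {
			return two("GO386", "387", "sse2")
		}
		return two("GO386", "softfloat", "sse2")
	case "arm":
		return [][]kv{{{"GOARM", "5"}}, {{"GOARM", "6"}}, {{"GOARM", "7"}}}
	case "mips", "mipsle":
		return two("GOMIPS", "hardfloat", "softfloat")
	case "mips64", "mips64le":
		return two("GOMIPS64", "hardfloat", "softfloat")
	case "ppc64", "ppc64le":
		return two("GOPPC64", "power8", "power9")
	case "wasm":
		return [][]kv{{}, {{"GOWASM", "satconv"}}, {{"GOWASM", "signext"}}}
	}
	return [][]kv{{}} // architectures without extra variables
}

// buildCommands mirrors the four nested "for" clauses of the commands list.
func buildCommands(v int) []string {
	var cmds []string
	for _, m := range machines(v) {
		for _, arch := range m.archs {
			for _, extra := range variants(arch, v) {
				for _, cgo := range []string{"0", "1"} {
					e := append(append([]kv{{"GOOS", m.goos}, {"GOARCH", arch}}, extra...), kv{"CGO_ENABLED", cgo})
					cmds = append(cmds, env2cmd(e)+"go build ./...")
				}
			}
		}
	}
	return cmds
}

func main() {
	for v := 111; v <= 116; v++ {
		fmt.Printf("golang:%s: %d go build commands\n", gover(v), len(buildCommands(v)))
	}
	fmt.Println(buildCommands(116)[0])
}
```

For Go 1.16 alone this yields 134 go build commands (67 GOOS/GOARCH/variable combinations, each with CGO_ENABLED=0 and 1), and every older Go version adds a similar number. As separate GitHub Actions jobs, the whole set would blow well past the 256-job matrix limit; in the Drone pipeline above, they are simply commands within one step per Go version.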

Try it yourself!

For those of you who need auto-scaling, we already offer managed Kubernetes, and for everyone else there’s the NWS cloud.