Two weeks ago, the post "Icinga 2 Config Sync: Behind the Scenes" explained how the config sync in Icinga 2 works and how you can look behind the scenes. Today, we will put our knowledge from that post to the test and try to replicate the config sync manually. The most important takeaways are recapped in this post, but if you are interested and have the time, the other post is also worth a read.

So why do we want to do this manually when Icinga can do it automatically for us? First of all, it’s a finger exercise that shows how much easier it is to let Icinga do the job. However, there may be situations where this is useful in practice, for example if you have an especially sensitive host where you don’t want automatic config changes but would rather review every change by hand.

Test Setup

We will use the same cluster as in the previous post: a single master node with two satellite zones, each with one agent. The two agents demonstrate how the config has to be distributed with and without the command endpoint feature. In contrast to the previous post, you might want to set accept_config = false in /etc/icinga2/features-enabled/api.conf throughout the cluster. Leaving it enabled is fine, though, as it won’t interfere with anything in this post.
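If you do want to switch it off, the following is a minimal sketch that flips the value in place. It assumes GNU sed and that the attribute already appears on its own line as `accept_config = true` (as the node setup wizard typically writes it); the function name `disable_accept_config` is hypothetical. Leave `accept_commands` as it is: agent-2 needs it enabled later on to execute checks on behalf of satellite-2.

```shell
#!/bin/sh
# Hypothetical helper: set accept_config = false in the ApiListener config file $1.
# Assumes GNU sed; --follow-symlinks edits the target of the features-enabled
# symlink instead of replacing the link with a regular file.
# Lines such as "accept_commands = true" are deliberately left untouched.
disable_accept_config() {
  sed -i --follow-symlinks -E \
    's/^([[:space:]]*accept_config[[:space:]]*=[[:space:]]*)true/\1false/' "$1"
}
```

On each node, you would then run `disable_accept_config /etc/icinga2/features-enabled/api.conf`, validate the result with `icinga2 daemon -C`, and apply it with `systemctl reload icinga2`.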

The following config snippets assume that host objects for agent-1 and agent-2 already exist, as well as a fairly common generic-service template. If these don’t exist, just add them to each snippet that references them.
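If your nodes don’t have them yet, here is one possible sketch for creating them. The template is modeled on the sample `conf.d/templates.conf` that ships with Icinga 2; the helper names, the `dummy` check command and the explicit host zone are assumptions for a lab setup. The zone is chosen the same way as for the services in this post: the agent’s own zone for agent-1 and the satellite zone for agent-2.

```shell
#!/bin/sh
# Hypothetical helper: append a generic-service template, modeled on the sample
# conf.d/templates.conf, to the config file $1. Skip this if the template
# already exists on the node, or Icinga 2 will complain about a redefinition.
append_generic_service() {
  cat >> "$1" <<'EOF'
template Service "generic-service" {
  max_check_attempts = 5
  check_interval = 1m
  retry_interval = 30s
}
EOF
}

# Hypothetical helper: append a host object named $2 in zone $3 to file $1.
# "dummy" (from the ITL) is a lab placeholder that reports UP by default;
# a real setup would use something like "hostalive" plus an address.
append_host() {
  cat >> "$1" <<EOF
object Host "$2" {
  check_command = "dummy"
  zone = "$3"
}
EOF
}
```

On master, for example, this would be `append_generic_service /etc/icinga2/icinga2.conf` followed by `append_host /etc/icinga2/icinga2.conf agent-1 agent-1` and `append_host /etc/icinga2/icinga2.conf agent-2 satellite-2`. Every other node that carries one of the services needs the template and the matching host as well.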

Agent with Local Scheduling

So let’s start by adding a new service on agent-1. On this agent, we want to perform both the check scheduling and the execution locally, so the config of course has to be added to the agent itself. Since this is just a proof of concept, we simply append all our configuration directly to /etc/icinga2/icinga2.conf. In a real-world setup, you would want to structure your configuration in a more meaningful way.

root@agent-1:~# cat >> /etc/icinga2/icinga2.conf <<EOF
object Service "load" {
  import "generic-service"
  host_name = "agent-1"
  check_command = "load"
}
EOF
root@agent-1:~# systemctl reload icinga2

If we now take a look at Icinga Web 2, we won’t find this new service, even though the logs of agent-1 show that checks are executed. This is because master is not aware of the service yet, as we didn’t let Icinga sync the definition. To fix this, we add the same object definition on master. Note the additional line that explicitly specifies the zone of the object; it prevents master from scheduling the checks locally.

root@master:~# cat >> /etc/icinga2/icinga2.conf <<EOF
object Service "load" {
  import "generic-service"
  host_name = "agent-1"
  check_command = "load"
  zone = "agent-1"
}
EOF
root@master:~# systemctl reload icinga2

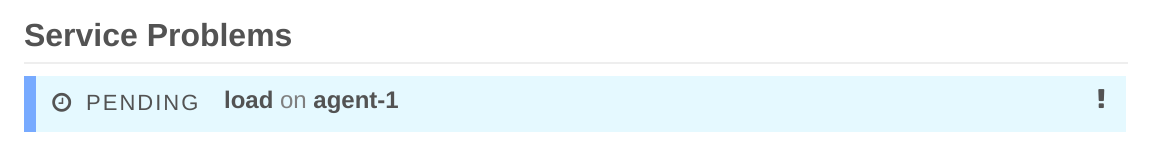

If we take another look at the web interface, we can report partial success: the service shows up but remains in the PENDING state and is shown as overdue (as symbolized by the small clock icon):

We would be done now if agent-1 were directly connected to master. However, in this setup satellite-1 sits in between, so it also has to be aware of the service in order to forward messages properly. To achieve this, we add the very same config snippet on satellite-1:

root@satellite-1:~# cat >> /etc/icinga2/icinga2.conf <<EOF
object Service "load" {
  import "generic-service"
  host_name = "agent-1"
  check_command = "load"
  zone = "agent-1"
}
EOF
root@satellite-1:~# systemctl reload icinga2

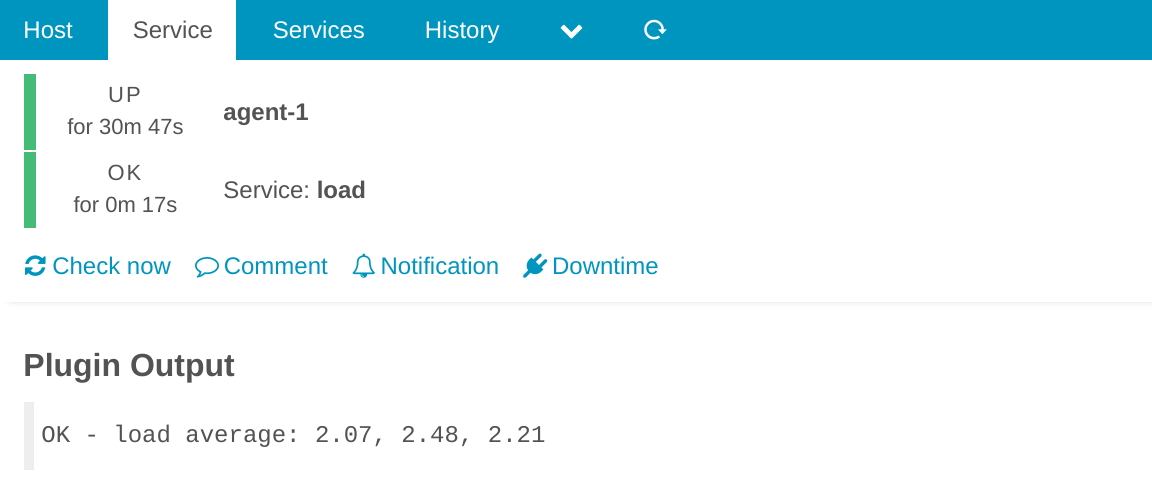

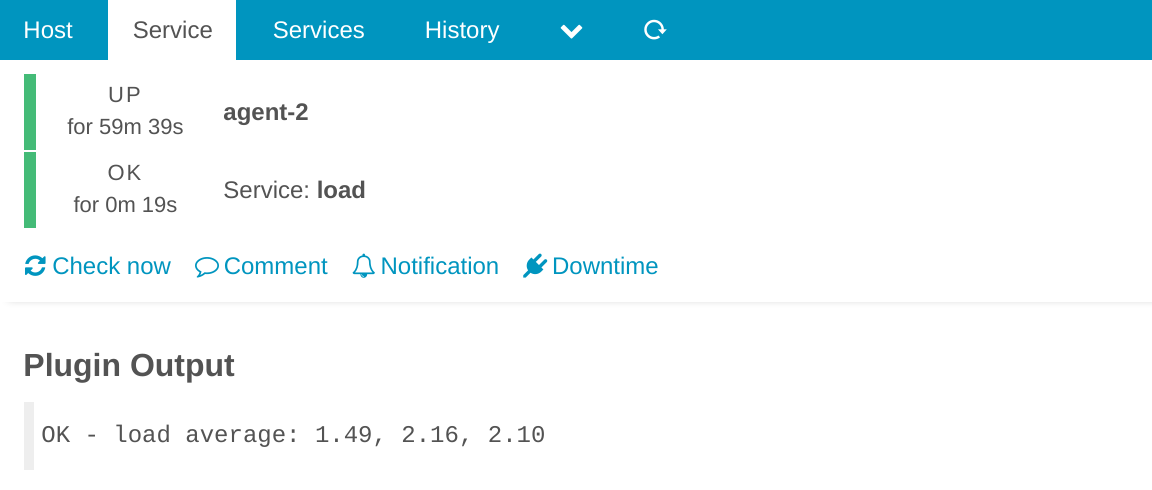

Indeed, this was the only missing part, now the service is properly checked and the results show up as they should:
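Because every node that needs the service has to end up with exactly the same definition, generating the snippet once may be less error-prone than retyping it on each node. A minimal sketch, using a hypothetical `load_service_snippet` function: it prints the service from this post for a given host and zone and only adds `command_endpoint` when a third argument is passed.

```shell
#!/bin/sh
# Hypothetical helper: print the "load" service definition used in this post.
#   $1 = host name, $2 = zone, $3 = optional command endpoint
load_service_snippet() {
  printf 'object Service "load" {\n'
  printf '  import "generic-service"\n'
  printf '  host_name = "%s"\n' "$1"
  printf '  check_command = "load"\n'
  # Only emit command_endpoint when one was requested.
  if [ -n "${3:-}" ]; then
    printf '  command_endpoint = "%s"\n' "$3"
  fi
  printf '  zone = "%s"\n' "$2"
  printf '}\n'
}
```

For agent-1, `load_service_snippet agent-1 agent-1 >> /etc/icinga2/icinga2.conf` produces the snippet used on master and satellite-1; on agent-1 itself, the explicit zone is simply the local zone. The command endpoint case that follows corresponds to `load_service_snippet agent-2 satellite-2 agent-2`.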

Agent as Command Endpoint

Next, we want to add a service that is checked on agent-2 using the command endpoint feature, i.e. let satellite-2 schedule the checks and delegate their execution to agent-2. Let’s start by adding a service object on master (the order in which you add the config across the nodes doesn’t matter; you just have to add it on every node that needs it). Note that the zone of the object is now the parent zone of the command endpoint, in this case satellite-2, since that is where the checks are scheduled.

root@master:~# cat >> /etc/icinga2/icinga2.conf <<EOF
object Service "load" {
  import "generic-service"
  host_name = "agent-2"
  check_command = "load"
  command_endpoint = "agent-2"
  zone = "satellite-2"
}
EOF
root@master:~# systemctl reload icinga2

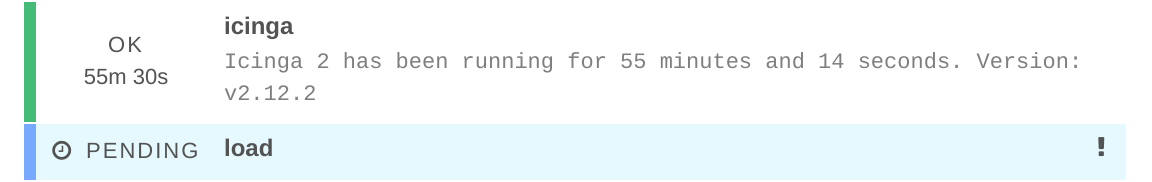

Just like before, this will create an overdue service as the config is not yet consistent across the cluster:

To fix this, the same config snippet has to be added on satellite-2. Here you could also omit the explicit zone name, as satellite-2 is the local zone and thus the default value.

root@satellite-2:~# cat >> /etc/icinga2/icinga2.conf <<EOF
object Service "load" {
  import "generic-service"
  host_name = "agent-2"
  check_command = "load"
  command_endpoint = "agent-2"
  zone = "satellite-2"
}
EOF
root@satellite-2:~# systemctl reload icinga2
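Rather than logging in to each node, you could also push one snippet to all nodes that need it. A minimal sketch, assuming root SSH access to every node under its endpoint name and the default config path, neither of which this post sets up; `push_snippet` is a hypothetical name. It runs `icinga2 daemon -C` before reloading, so a broken snippet is reported and the reload skipped, although the appended text stays in the file in that case.

```shell
#!/bin/sh
# Hypothetical helper: append the config read from stdin to icinga2.conf on
# each node given as an argument, then validate and reload Icinga 2 there.
# Assumes root SSH access to every node and the default config path.
push_snippet() {
  snippet=$(cat)
  for node in "$@"; do
    printf '%s\n' "$snippet" | ssh "root@$node" \
      'cat >> /etc/icinga2/icinga2.conf && icinga2 daemon -C && systemctl reload icinga2' \
      || { echo "push to $node failed" >&2; return 1; }
  done
}

# Example, with load-agent-2.conf as a hypothetical local file holding the
# service definition from above:
#   push_snippet master satellite-2 < load-agent-2.conf
```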

Even though we have added nothing on agent-2, this service is now properly checked thanks to the command endpoint feature.

So if satellite-2 didn’t exist and agent-2 were a direct child of master, there would be no need to sync any configuration at all, as long as all required check commands are already defined in the Icinga Template Library. For a small cluster with only two levels, this makes for a nice setup with fewer moving parts.
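To confirm that an agent can run a check without any synced CheckCommand definitions, you can ask it directly, since `icinga2 object list` shows the objects from the last successfully loaded configuration. A minimal sketch with the hypothetical name `has_check_command`; matching on the "Object 'NAME' of type 'CheckCommand':" header line is an assumption about the CLI output that is worth verifying on your version.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the local node knows a CheckCommand named $1,
# e.g. one shipped with the Icinga Template Library.
# Assumes `icinga2 object list` prints a header line such as
#   Object 'load' of type 'CheckCommand':
has_check_command() {
  icinga2 object list --type CheckCommand --name "$1" \
    | grep -qF "Object '$1' of type 'CheckCommand'"
}
```

Run on agent-2, `has_check_command load && echo "load is available"` would indicate that the `load` service above needs no additional command definition on that agent.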